Why the Singularity Narrative Harms Real Progress

In recent years, public discourse around artificial intelligence has increasingly drifted from engineering reality toward philosophical spectacle. Leaders like Sam Altman have amplified narratives of an impending “gentle singularity,” where AI transcends human intelligence and ushers in a transformative era. While such storytelling captures the imagination, it also distorts the perception of where AI truly stands today — and, more dangerously, how it actually works.

As someone who builds with these systems daily, I feel a growing frustration: this mythology of AI as magic risks undermining the very real, grounded engineering work required to make AI useful, reliable, and safe. It shifts focus from the tangible to the fantastical, from architecture to ideology. And in doing so, it creates unrealistic expectations among key stakeholders — executives, investors, policymakers, and users.

This article is a reality check. Not to dismiss ambition, but to re-anchor our collective vision of AI to what actually drives progress: mathematics, engineering, and iteration.

The Problem with “The Singularity”

Let’s start with the core idea. The singularity is a hypothetical future in which artificial general intelligence (AGI) surpasses human cognition, triggering a cascade of exponential self-improvement. This is the ideological backdrop against which much of Silicon Valley’s AI rhetoric operates. The most optimistic versions suggest benevolent outcomes: the end of disease, poverty, even death itself. The darker versions spin into warnings of AI overlords or alignment catastrophes.

But in practice, this framing is disconnected from how AI is actually built. Most AI today, including the most powerful large language models, consists of narrow statistical systems. They perform well on specific tasks under well-defined conditions. They are not general intelligences. They do not reason, infer intent, or understand causality in the human sense. They are trained on vast data, optimize objective functions, and exhibit emergent behaviors that, while impressive, remain brittle under novel or adversarial conditions.

Yet public narratives leapfrog these facts. They promote a vision of AI that solves everything — education, healthcare, energy, creativity — without acknowledging that each domain comes with its own set of structural, ethical, and technical complexities that no single model can overcome in isolation. This isn’t just premature. It’s strategically damaging.

AI Is a Tool, Not a Prophet

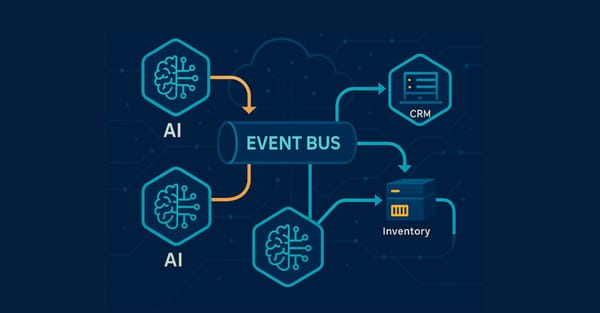

Technologists know this. We work within constraints every day: limited compute budgets, biased datasets, error propagation, hallucination risks, latency-performance tradeoffs. We know that deploying a chatbot to a million users involves more than training a model — it requires orchestration layers, observability, rate limiting, feedback loops, and human-in-the-loop oversight.
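To make one of those layers concrete, here is a minimal sketch of rate limiting using a token bucket. The class name, parameters, and injected clock are illustrative assumptions, not a reference to any particular production system; a real deployment would typically keep this state in a shared store rather than in process memory.

```python
import time


class TokenBucket:
    """Hypothetical per-user limiter: refills `rate` tokens per second, up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock          # injectable so the limiter can be tested without sleeping
        self.tokens = capacity      # start with a full bucket
        self.updated = clock()

    def allow(self, cost=1.0):
        """Return True and spend `cost` tokens if the request fits, else False."""
        now = self.clock()
        elapsed = now - self.updated
        self.updated = now
        # Refill for the time that has passed, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

A caller would keep one bucket per user or API key and reject or queue a request whenever `allow()` returns `False`.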

But when the dominant narrative suggests that AGI is just around the corner — and that its arrival will resolve these problems retroactively — we stop asking the hard questions that actually move the field forward.

Questions like:

- How do we make AI systems interpretable and trustworthy at scale?

- How do we ensure data provenance and model accountability?

- What architectural decisions make fine-tuning sustainable for real-world applications?

- What are the security boundaries of language models in production environments?

Instead, we are subjected to philosophical debates about machine consciousness, or worse, regulatory overreach based on speculative threats rather than current capabilities. Meanwhile, practitioners are still trying to address fundamental issues, such as token-length truncation and context window limitations.
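The context window problem is mundane enough to sketch in a few lines. The example below keeps a system prompt plus as many of the newest messages as fit in a fixed budget. Both function names are hypothetical, and counting whitespace-separated words is an assumption standing in for a real tokenizer, whose counts will differ.

```python
def count_tokens(text):
    # Assumption: whitespace splitting stands in for a model-specific tokenizer.
    return len(text.split())


def fit_to_window(system_prompt, messages, max_tokens):
    """Keep the system prompt plus the newest messages that fit in max_tokens."""
    budget = max_tokens - count_tokens(system_prompt)
    if budget < 0:
        raise ValueError("system prompt alone exceeds the context window")

    kept = []
    # Walk from newest to oldest and stop at the first message that does not fit.
    for message in reversed(messages):
        cost = count_tokens(message)
        if cost > budget:
            break
        kept.append(message)
        budget -= cost

    kept.reverse()  # restore chronological order
    return [system_prompt] + kept
```

Dropping the oldest turns is only one possible policy. Summarizing or retrieving older context are alternatives, each with its own failure modes.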

The risk is clear: when we treat AI like prophecy instead of a toolchain, we misallocate attention and resources. The result is hype-driven development, unrealistic executive expectations, and disillusionment when models fail to perform as expected in practice.

Every New Model Is a Patch, Not a Portal

Every time a new model is released — whether GPT-4, Claude, Gemini, or LLaMA — public discourse treats it like a portal to the future. The headlines scream about breakthroughs. But under the hood, the real story is far more incremental.

Each release is a patch over the limitations of the last one. Larger context windows. Better alignment tuning. More efficient inference. Less hallucination. Improved multilingual performance. These are meaningful, valuable steps — but they are not singularities. They are part of a long, iterative climb toward utility, robustness, and specialization.

What matters isn’t the metaphor; it’s the margin. Is the model producing fewer hallucinations on medical content? Can it support multimodal workflows with latency under 100 ms? Is it stable under edge conditions? These are the metrics that determine real-world value.
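Checking a margin like this can be sketched as a simple release gate. The function names, the use of a 95th-percentile latency, and the 2% ceiling on flagged answers are all illustrative assumptions; only the 100 ms budget comes from the question above, and `flagged` is assumed to hold human review labels marking hallucinated answers.

```python
def p95(values):
    """Nearest-rank 95th percentile of a non-empty list of numbers."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n)
    return ordered[rank - 1]


def release_report(latencies_ms, flagged, latency_budget_ms=100.0, max_flag_rate=0.02):
    """Summarize tail latency and the share of answers reviewers flagged."""
    if not flagged:
        raise ValueError("no review labels")
    tail = p95(latencies_ms)
    flag_rate = sum(flagged) / len(flagged)
    return {
        "p95_latency_ms": tail,
        "flag_rate": flag_rate,
        # Thresholds are assumptions; each product would set its own.
        "passes": tail < latency_budget_ms and flag_rate <= max_flag_rate,
    }
```

The point is less the specific thresholds than the habit: a release is judged against measured numbers on a fixed evaluation set, not against the headline.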

And these gains don’t happen in isolation. They require massive infrastructure coordination, new evaluation benchmarks, optimized runtimes, scalable feedback collection, and deeply human labor. That’s not magic. That’s engineering.

Real Progress Requires Realism

If we want AI to transform industries, such as logistics, education, law, and scientific research, we need to invest less in mythology and more in methodology.

We need executive teams to understand that AI is a cost center before it’s a value center. That models don’t self-deploy. That prompt engineering is not enough. That real impact comes from aligning models with workflows, integrating them into legacy systems, and monitoring for failure, not because the models are broken, but because the world is.

We need investors to fund both infrastructure and innovation. We need regulators to base frameworks on current capabilities, not speculative scenarios. We need researchers to focus not just on larger models but on more capable ones: models that can adapt, reason over time, and ground their answers in verifiable reality.

Above all, we need the public and tech leaders to appreciate that AI doesn’t “just work.” It works because people make it work, one step, one experiment, one release at a time.

Ambition Without Illusion

I’m not arguing against ambition. The dream of building AI that helps humans reason better, create faster, and live healthier is profoundly motivating. But ambition should not require illusion.

The “gentle singularity” is a compelling story. But it’s just that — a story. The reality is far more complex and intriguing. It’s the story of engineers solving problems, researchers refining assumptions, companies aligning incentives, and users testing limits.

AI’s future is being built in code, not prophecy. And if we’re honest about that, we can build something far more powerful than magic: we can build trust.
