The AI landscape is rapidly evolving beyond isolated, single-purpose agents toward rich ecosystems of specialized agents that collaborate to solve complex problems. Google's Agent2Agent (A2A) protocol represents a significant step toward standardizing how these agents communicate. However, implementing it at scale requires more than the protocol itself: it demands a robust, event-driven architecture that can support complex interactions between multiple specialized agents.

This article introduces a novel approach to implementing a serverless event-driven agent architecture (EDAA) using Google's A2A protocol with AWS services. We'll explore how to leverage AWS EventBridge, SQS, Lambda, Fargate, and other serverless components to create a scalable, resilient, and cost-effective agent ecosystem. To demonstrate these concepts, we'll build a practical legal contract analysis system where specialized agents collaborate to create a comprehensive graph of contract relationships with other contracts and relevant laws.

By the end of this article, you'll understand how to implement Google's A2A protocol in a serverless AWS environment, design an event-driven architecture using AWS EventBridge and SQS, deploy agents using Lambda and Fargate, leverage Amazon Bedrock for AI capabilities, integrate the Model Context Protocol (MCP) for external API access, implement authentication and security best practices, and build a complete, production-ready agent ecosystem using AWS CDK.

The Evolution of Agent Communication

The current AI agent landscape resembles the early web: fragmented and siloed. Agents built on different frameworks often can't communicate effectively. Each is powerful within its ecosystem but isolated from others. Google's A2A protocol aims to solve this by providing a standardized way for agents to announce their capabilities via Agent Cards, exchange structured messages, manage tasks and their lifecycles, and share artifacts and results. However, the A2A protocol alone doesn't address the communication infrastructure needed for complex agent ecosystems.

The current A2A implementation relies on traditional web patterns (HTTP, JSON-RPC, and Server-Sent Events) for direct, point-to-point communication. While functional for simple interactions, this approach has significant limitations at scale. It creates an explosion of N²-N possible integrations for N agents, making the system brittle and complex. Each agent needs to know its peers' exact endpoint, format, and availability, creating tight coupling where if one agent goes down or changes, others break. Point-to-point communication is also inherently private, making it difficult to log, monitor, trace, or replay messages for debugging or compliance. Additionally, multi-agent workflows require separate orchestration layers to manage the flow across systems.

What's missing is a shared, asynchronous backbone where agents can publish what they know and subscribe to what they need, a way to decouple producers and consumers of intelligence. This architectural pattern should sound familiar, because software architecture went through the same three-stage evolution. Stage 1 was the monolith, where all functionality lived in a single codebase. Stage 2 was microservices with direct, synchronous communication, which still created complex webs of dependencies. Stage 3 is event-driven microservices, where services publish events to a shared broker and others subscribe to the events they care about, reducing the number of integrations between N producers and M consumers from quadratic (N×M) to linear (N+M). The A2A protocol is at stage 2 and needs to evolve to stage 3 with an event-driven backbone.
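The scaling argument can be made concrete with a little arithmetic. The sketch below is purely illustrative and not part of the A2A specification; the function names are hypothetical. It compares the number of integrations needed for direct wiring with the number needed when every participant talks only to a shared broker.

```typescript
// Directed point-to-point links among N peers: each agent may call the other N - 1.
function pointToPointLinks(agentCount: number): number {
  return agentCount * (agentCount - 1); // N² - N
}

// Direct wiring between separate groups of producers and consumers.
function directIntegrations(producers: number, consumers: number): number {
  return producers * consumers; // N × M
}

// With a shared broker, every producer and every consumer integrates once, with the bus.
function brokeredIntegrations(producers: number, consumers: number): number {
  return producers + consumers; // N + M
}

console.log(pointToPointLinks(10)); // 90
console.log(directIntegrations(10, 10)); // 100
console.log(brokeredIntegrations(10, 10)); // 20
```

The gap widens quickly: the direct counts grow with the square of the number of agents, while the brokered count grows one integration per agent.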

AWS Services for Event-Driven Agent Architecture

AWS provides a rich ecosystem of services that can be leveraged to build a serverless, event-driven agent architecture. At the heart of our architecture is AWS EventBridge, a serverless event bus that makes it easy to connect applications together using data from your own applications, integrated software as a service (SaaS) applications, and AWS services. It's the perfect backbone for our event-driven agent architecture because it provides a central event bus for all agent communications, supports complex event filtering and routing, integrates natively with other AWS services, scales automatically to handle any volume of events, offers built-in reliability and durability, and provides a pay-per-use pricing model.
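To make the filtering and routing idea concrete, here is a minimal sketch of how a rule's event pattern selects events. It implements only a simplified subset of EventBridge pattern semantics (exact-value lists and nested objects) and runs locally; the matcher itself is hypothetical and is not an AWS API. The `source` and `detail-type` values are the ones used by the example system in this article.

```typescript
// Simplified subset of EventBridge pattern semantics: every key in the pattern
// must be present in the event, and leaf arrays list the accepted values.
type Pattern = { [key: string]: Array<string | number | boolean> | Pattern };
type JsonObject = { [key: string]: unknown };

function matchesPattern(pattern: Pattern, candidate: JsonObject): boolean {
  return Object.entries(pattern).every(([key, rule]) => {
    const value = candidate[key];
    if (Array.isArray(rule)) {
      // Leaf rule: the event value must equal one of the listed values.
      return rule.some((allowed) => allowed === value);
    }
    // Nested rule: descend into the corresponding nested object of the event.
    return typeof value === "object" && value !== null && !Array.isArray(value)
      ? matchesPattern(rule, value as JsonObject)
      : false;
  });
}

// Illustrative rule: route similar-contract requests published by the contract agent.
const similarContractsRule: Pattern = {
  source: ["com.legalcontract.agent"],
  "detail-type": ["SimilarContractsRequested"],
};

const sampleEvent: JsonObject = {
  source: "com.legalcontract.agent",
  "detail-type": "SimilarContractsRequested",
  detail: { taskId: "task-1", contractId: "contract-1", keyTerms: ["Indemnity"], limit: 5 },
};

console.log(matchesPattern(similarContractsRule, sampleEvent)); // true
```

Real EventBridge patterns support more operators (prefix, numeric ranges, `anything-but`, and others), so treat this as a mental model rather than a replacement for the service's matching.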

While EventBridge is excellent for event routing, Amazon SQS (Simple Queue Service) provides reliable, highly scalable hosted queues for storing messages as they travel between agents. SQS complements EventBridge by providing message buffering to handle traffic spikes, ensuring at-least-once delivery of messages, supporting dead-letter queues for handling failed processing, enabling message batching for improved efficiency, and offering FIFO (First-In-First-Out) queues when message ordering is essential.
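At-least-once delivery has a practical consequence for agents: the same message can arrive more than once, so handlers should be idempotent. The following is a minimal sketch of that idea, assuming deduplication by message ID; the class and its in-memory `Set` are hypothetical stand-ins for a durable store such as a database table.

```typescript
interface QueueMessage {
  messageId: string;
  body: string;
}

// Guards a handler so that a redelivered message does not repeat its side effects.
class IdempotentConsumer {
  private readonly processed = new Set<string>(); // stand-in for a durable store
  private readonly handle: (body: string) => void;

  constructor(handle: (body: string) => void) {
    this.handle = handle;
  }

  // Returns true when the message was handled, false when it was a duplicate.
  receive(message: QueueMessage): boolean {
    if (this.processed.has(message.messageId)) {
      return false;
    }
    this.handle(message.body);
    // Record the ID only after the handler succeeds, so failures are retried.
    this.processed.add(message.messageId);
    return true;
  }
}

const handledBodies: string[] = [];
const consumer = new IdempotentConsumer((body) => {
  handledBodies.push(body);
});

console.log(consumer.receive({ messageId: "m-1", body: "SimilarContractsRequested" })); // true
console.log(consumer.receive({ messageId: "m-1", body: "SimilarContractsRequested" })); // false
console.log(handledBodies.length); // 1
```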

We implement most of our agents on AWS Lambda, which lets you run code without provisioning or managing servers. It's ideal for agents that need to respond to events quickly, have variable or unpredictable workloads, process events statelessly, and require automatic scaling from zero to peak demand. For agents that require more resources, longer running times, or stateful processing, AWS Fargate provides serverless compute for containers. Fargate is well suited to complex agents with larger memory or CPU requirements, agents that maintain state between requests, workloads that benefit from container packaging, and applications that need to expose HTTP endpoints (like A2A servers).
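A stateless, queue-driven agent on Lambda could be shaped roughly like the sketch below. It uses small local interfaces that mirror the SQS event Lambda passes to a handler, so it runs without any AWS dependency; `processAgentEvent` is a hypothetical placeholder for real agent logic. The `batchItemFailures` return shape is Lambda's partial batch response, which only takes effect when `ReportBatchItemFailures` is enabled on the event source mapping.

```typescript
// Minimal local shapes mirroring the SQS event delivered to a Lambda handler.
interface SqsRecord { messageId: string; body: string; }
interface SqsEvent { Records: SqsRecord[]; }
interface SqsBatchResponse { batchItemFailures: { itemIdentifier: string }[]; }

// Hypothetical per-message agent logic; throws on malformed input.
async function processAgentEvent(body: string): Promise<void> {
  const parsed = JSON.parse(body) as { "detail-type"?: string };
  if (!parsed["detail-type"]) {
    throw new Error("Missing detail-type");
  }
}

// Each record is processed independently; only the failed ones are reported
// back, so SQS retries those messages rather than the whole batch.
async function agentHandler(event: SqsEvent): Promise<SqsBatchResponse> {
  const batchItemFailures: { itemIdentifier: string }[] = [];
  for (const record of event.Records) {
    try {
      await processAgentEvent(record.body);
    } catch (error) {
      console.error(`Record ${record.messageId} failed:`, error);
      batchItemFailures.push({ itemIdentifier: record.messageId });
    }
  }
  return { batchItemFailures };
}
```

Messages that keep failing are redelivered until the queue's redrive policy moves them to the dead-letter queue.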

For AI capabilities, we use Amazon Bedrock, a fully managed service that makes high-performing foundation models from leading AI companies available through an API. It's well suited to analyzing text, generating content, and extracting insights within agents, and it provides access to models like Claude 3.7 Sonnet without managing infrastructure.

For complex workflows involving multiple agents, AWS Step Functions provides a serverless orchestration service that makes coordinating the components of distributed applications easy. It helps define complex agent interaction patterns, manage state transitions in multi-step processes, implement retry logic and error handling, and visualize workflow execution.
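As a rough illustration of what such a workflow definition can look like, the sketch below expresses an Amazon States Language (ASL) state machine as a plain TypeScript object. The state names, retry numbers, and the choice of the `events:putEvents.waitForTaskToken` integration are assumptions made for this example, not the article's actual definition; the event bus name, source, and detail types match the example system described in this article.

```typescript
// Builds one branch that publishes a request event and then pauses until a
// callback returns the task token (for example via SendTaskSuccess).
function agentBranch(stateName: string, detailType: string) {
  return {
    StartAt: stateName,
    States: {
      [stateName]: {
        Type: "Task",
        Resource: "arn:aws:states:::events:putEvents.waitForTaskToken",
        Parameters: {
          Entries: [
            {
              EventBusName: "LegalContractAnalysisEventBus",
              Source: "com.legalcontract.agent",
              DetailType: detailType,
              Detail: {
                "taskId.$": "$.taskId",
                "contractId.$": "$.contractId",
                "taskToken.$": "$$.Task.Token",
              },
            },
          ],
        },
        End: true,
      },
    },
  };
}

// Illustrative state machine: fan out to both agents in parallel, retry on
// task failures, and route any remaining error to a failure state.
const contractAnalysisStateMachine = {
  Comment: "Fan out to the retrieval agents, then finish",
  StartAt: "RequestAgentWork",
  States: {
    RequestAgentWork: {
      Type: "Parallel",
      Branches: [
        agentBranch("RequestSimilarContracts", "SimilarContractsRequested"),
        agentBranch("RequestLegalReferences", "LegalReferencesRequested"),
      ],
      Retry: [{ ErrorEquals: ["States.TaskFailed"], IntervalSeconds: 5, MaxAttempts: 3, BackoffRate: 2 }],
      Catch: [{ ErrorEquals: ["States.ALL"], Next: "AnalysisFailed" }],
      Next: "AnalysisSucceeded",
    },
    AnalysisSucceeded: { Type: "Succeed" },
    AnalysisFailed: { Type: "Fail", Error: "ContractAnalysisFailed" },
  },
};

console.log(JSON.stringify(contractAnalysisStateMachine, null, 2));
```

The serialized JSON is what would be supplied as the state machine definition; retry and error handling live in the definition rather than in agent code.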

Amazon DynamoDB is a fast, flexible NoSQL database service with single-digit millisecond performance at any scale. It's perfect for storing agent state and task information, tracking relationships between entities, implementing event sourcing patterns, and providing high-performance, low-latency data access.
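One way to picture the storage model is a table keyed by task ID (partition key) and item type (sort key), so each agent writes its own item for a task without coordinating with the others. The sketch below is an in-memory stand-in for that layout, not DynamoDB code; the class is hypothetical, and the item type names follow the ones used by the example system in this article.

```typescript
type ItemType = "task" | "contract" | "similar_contracts" | "legal_references";

// In-memory stand-in for a table keyed by (taskId, itemType).
class TaskStateStore {
  private readonly items = new Map<string, string>();

  private static key(taskId: string, itemType: ItemType): string {
    return `${taskId}#${itemType}`;
  }

  put(taskId: string, itemType: ItemType, data: unknown): void {
    this.items.set(TaskStateStore.key(taskId, itemType), JSON.stringify(data));
  }

  get(taskId: string, itemType: ItemType): unknown {
    const raw = this.items.get(TaskStateStore.key(taskId, itemType));
    return raw === undefined ? undefined : JSON.parse(raw);
  }

  // A task can be compiled once every intermediate result has been written.
  isReadyToCompile(taskId: string): boolean {
    const required: ItemType[] = ["contract", "similar_contracts", "legal_references"];
    return required.every((itemType) => this.get(taskId, itemType) !== undefined);
  }
}

const taskStore = new TaskStateStore();
taskStore.put("task-1", "contract", { title: "Master Services Agreement" });
taskStore.put("task-1", "similar_contracts", []);
console.log(taskStore.isReadyToCompile("task-1")); // false
taskStore.put("task-1", "legal_references", []);
console.log(taskStore.isReadyToCompile("task-1")); // true
```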

For security, AWS Secrets Manager helps you protect access to your applications, services, and IT resources without the upfront cost and complexity of managing your secrets management infrastructure. It's essential for securely storing API keys and credentials, managing access to external services, rotating credentials automatically, and controlling access to sensitive information.
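Because agents may handle many events per second, it is common to cache secret values briefly instead of fetching them on every invocation. Below is a minimal sketch of such a cache, assuming a time-to-live short enough to pick up rotated credentials. The `SecretFetcher` type and `CachedSecrets` class are hypothetical; in a real deployment the fetcher would wrap a call to Secrets Manager.

```typescript
type SecretFetcher = (secretName: string) => Promise<string>;

// Caches secret values for a limited time so that warm invocations avoid a
// network call per event while still refreshing after rotation.
class CachedSecrets {
  private readonly cache = new Map<string, { value: string; expiresAt: number }>();
  private readonly fetchSecret: SecretFetcher;
  private readonly ttlMs: number;

  constructor(fetchSecret: SecretFetcher, ttlMs: number) {
    this.fetchSecret = fetchSecret;
    this.ttlMs = ttlMs;
  }

  // `now` is injectable to make expiry behavior easy to test.
  async get(secretName: string, now: number = Date.now()): Promise<string> {
    const cached = this.cache.get(secretName);
    if (cached && cached.expiresAt > now) {
      return cached.value;
    }
    const value = await this.fetchSecret(secretName);
    this.cache.set(secretName, { value, expiresAt: now + this.ttlMs });
    return value;
  }
}

// Fake fetcher for demonstration; it counts how often the backing store is hit.
let fetchCount = 0;
const secrets = new CachedSecrets(async (secretName) => {
  fetchCount += 1;
  return `value-of-${secretName}`;
}, 60_000);
```

Calling `secrets.get(...)` twice within the TTL results in a single fetch; a call after expiry fetches again.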

Example: Legal Contract Analysis System with EDAA

While developing agents for LIDIA, a SaaS platform for legal technology applications, we encountered a representative use case that validated the architecture: a system for legal contract analysis that constructs a graph of relationships between contracts and applicable laws. The implementation involved three specialized agents: a Legal Contract Agent, responsible for parsing contracts and coordinating the workflow; a Document Retrieval Agent, tasked with identifying similar contracts from a repository; and a Legal Knowledge Base Agent, which locates relevant laws and regulations for each contract clause.

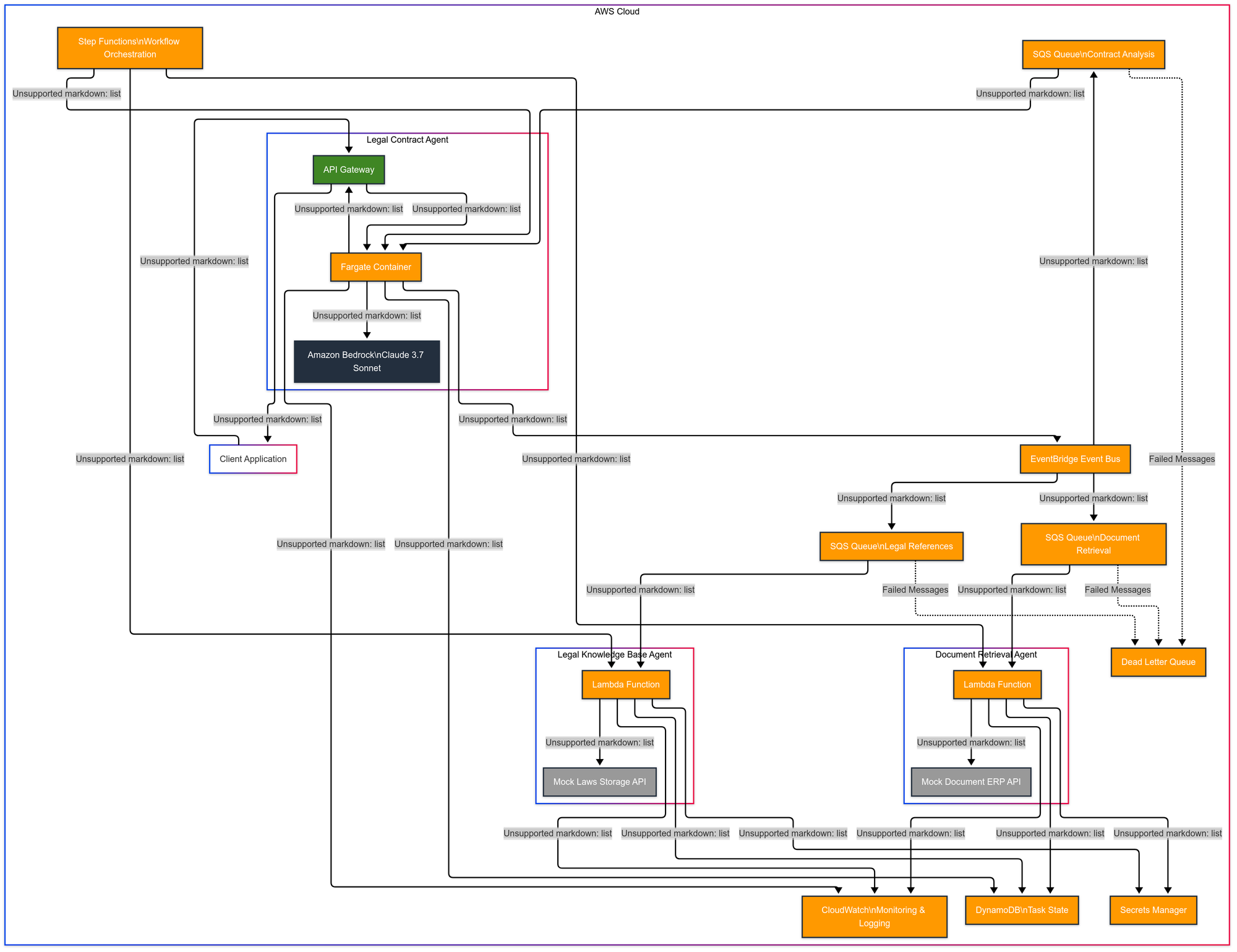

Our architecture leverages multiple AWS services to create a robust, scalable system. EventBridge serves as the central nervous system, routing events between agents. SQS Queues buffer events for each agent type, providing resilience against traffic spikes and ensuring reliable delivery. A Dead Letter Queue (DLQ) captures failed message processing attempts for later analysis and reprocessing. DynamoDB stores task states and intermediate results, enabling agents to work asynchronously. Step Functions orchestrates complex workflows across multiple agents, managing state transitions and error handling. CloudWatch provides comprehensive monitoring and logging for the entire system. Secrets Manager securely stores and manages API keys and credentials for external services. API Gateway exposes the Legal Contract Agent's A2A endpoints to external clients. Fargate runs the Legal Contract Agent as a containerized application with its own HTTP server. Lambda functions implement the Document Retrieval and Legal Knowledge Base agents, scaling automatically based on event volume. And Bedrock provides AI capabilities through Claude 3.7 Sonnet for contract analysis.

Here's the overall architecture of our system:

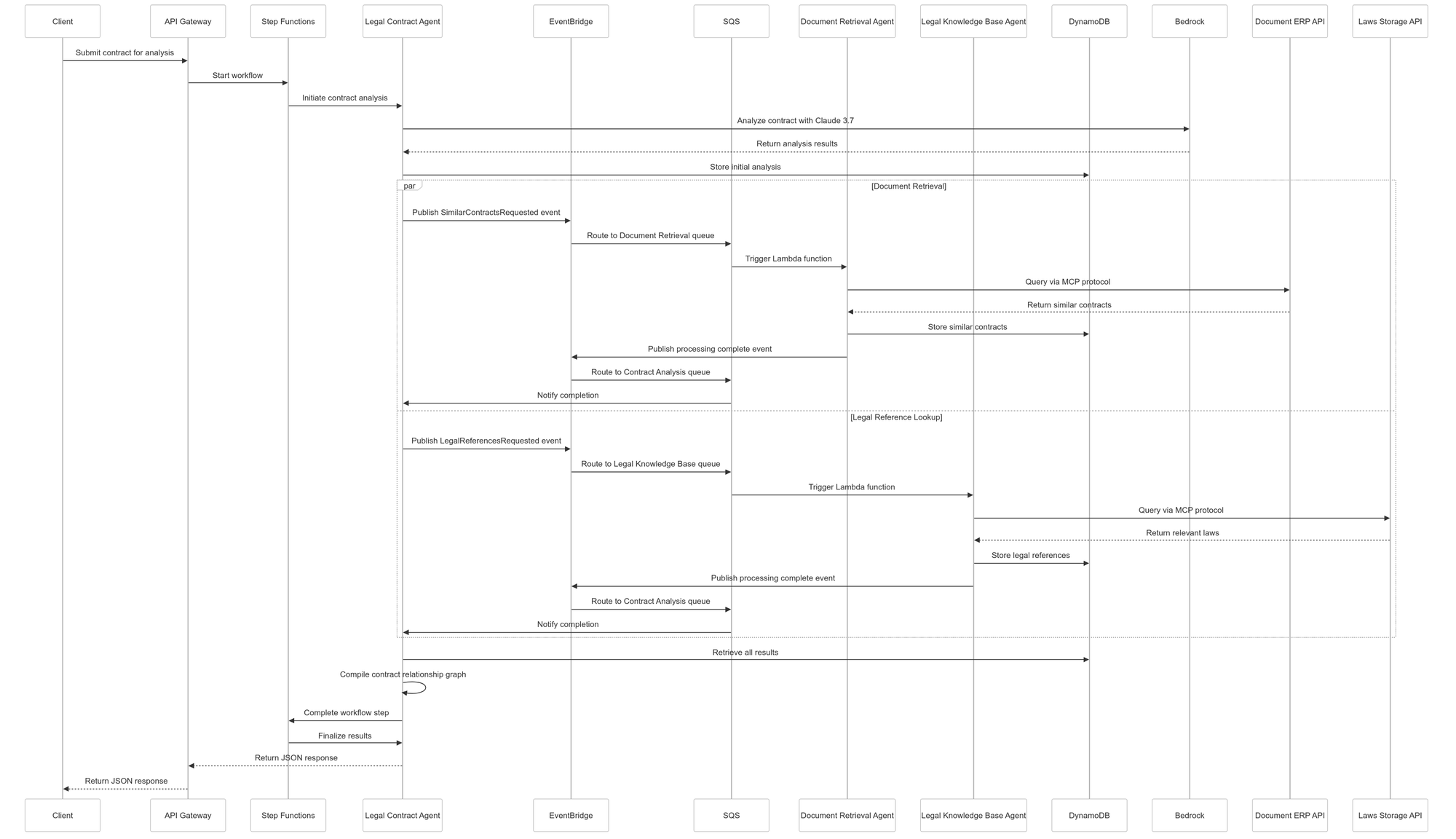

The interaction flow between these agents is complex but well-orchestrated.

Interaction Flow

The steps below, also shown in the sequence diagram that follows them, describe how these agents interact:

- When a client submits a contract for analysis, the request is forwarded to the Legal Contract Agent running on Fargate through API Gateway.

- This agent analyzes the contract using Claude 3.7 Sonnet via Amazon Bedrock, then publishes events to EventBridge requesting similar contracts and legal references.

- These events are routed to SQS queues, which trigger the Document Retrieval Agent and Legal Knowledge Base Agent Lambda functions.

- These agents query external systems via the MCP protocol, store their results in DynamoDB, and publish completion events back to EventBridge.

- The Legal Contract Agent receives these events, retrieves all results from DynamoDB, compiles the contract relationship graph, and returns the final JSON response to the client.

This entire workflow is orchestrated by Step Functions, which manages the state transitions and ensures that all steps are completed successfully. If any step fails, the workflow can be retried, or alternative paths can be taken. CloudWatch monitors the system, providing logs, metrics, and alarms to ensure everything runs smoothly. Secrets Manager securely stores and manages the credentials used to access the external document repository and legal database.

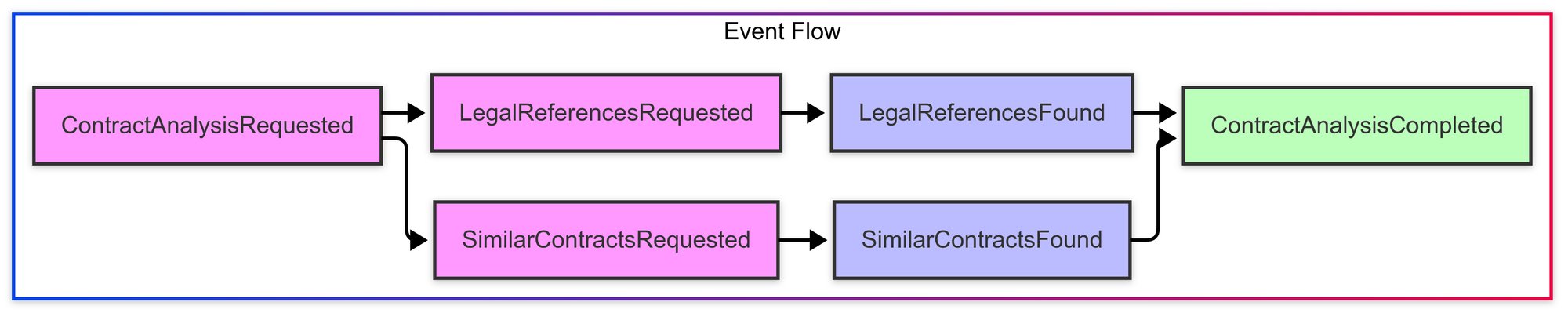

Event Flow

The system uses a series of events to coordinate work between agents, flowing from ContractAnalysisRequested to SimilarContractsRequested and LegalReferencesRequested, then to SimilarContractsFound and LegalReferencesFound, and finally to ContractAnalysisCompleted. This event-driven approach decouples the agents, allowing them to evolve independently and scale based on their specific workloads.
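The flow described above can be written down as a small transition table. This is a sketch for illustration only: the event names come from the article, while the `nextEvents` map and `canComplete` helper are hypothetical and not part of the system's code.

```typescript
type AgentEventType =
  | "ContractAnalysisRequested"
  | "SimilarContractsRequested"
  | "LegalReferencesRequested"
  | "SimilarContractsFound"
  | "LegalReferencesFound"
  | "ContractAnalysisCompleted";

// Which events each event may lead to, following the flow described above.
const nextEvents: Record<AgentEventType, AgentEventType[]> = {
  ContractAnalysisRequested: ["SimilarContractsRequested", "LegalReferencesRequested"],
  SimilarContractsRequested: ["SimilarContractsFound"],
  LegalReferencesRequested: ["LegalReferencesFound"],
  SimilarContractsFound: ["ContractAnalysisCompleted"],
  LegalReferencesFound: ["ContractAnalysisCompleted"],
  ContractAnalysisCompleted: [],
};

// The completion event is only valid once both "Found" events have arrived,
// in whichever order the two agents happen to finish.
function canComplete(received: ReadonlySet<AgentEventType>): boolean {
  return received.has("SimilarContractsFound") && received.has("LegalReferencesFound");
}

const receivedSoFar = new Set<AgentEventType>(["SimilarContractsFound"]);
console.log(canComplete(receivedSoFar)); // false
receivedSoFar.add("LegalReferencesFound");
console.log(canComplete(receivedSoFar)); // true
```

Because the two request events are independent, the retrieval agents can run in parallel, and the join happens only at the completion check.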

Implementation Details

Let's examine each component's implementation details, starting with the common types that define our A2A protocol implementation and EventBridge events.

Types for A2A Protocol and EventBridge Events

These types include structures for agent cards, tasks, messages, artifacts, event details, and the output graph format. They provide a strong typing foundation for our TypeScript implementation, ensuring consistency across all components.

```typescript
// Types for A2A Protocol and EventBridge Events

// Common types for A2A Protocol
export interface AgentCard {
  name: string;
  description: string;
  version: string;
  capabilities: string[];
  endpoint: string;
  authentication: {
    type: "aws_iam" | "api_key" | "none";
    details?: Record<string, any>;
  };
}

export type TaskStatus =
  | "submitted"
  | "working"
  | "input-required"
  | "completed"
  | "failed"
  | "canceled";

export interface Task {
  id: string;
  status: TaskStatus;
  messages: Message[];
  artifacts: Artifact[];
  created_at: string;
  updated_at: string;
}

export interface Message {
  role: "user" | "agent";
  parts: Part[];
  created_at: string;
}

export type Part = TextPart | FilePart | DataPart;

export interface TextPart {
  type: "text";
  content: string;
}

export interface FilePart {
  type: "file";
  content_type: string;
  content: string | null;
  uri?: string;
}

export interface DataPart {
  type: "data";
  content_type: "application/json";
  content: Record<string, any>;
}

export interface Artifact {
  id: string;
  type: string;
  parts: Part[];
  created_at: string;
}

// EventBridge Event Types
export interface EventBridgeEvent<T> {
  version: string;
  id: string;
  "detail-type": string;
  source: string;
  account: string;
  time: string;
  region: string;
  resources: string[];
  detail: T;
}

// Event Detail Types
export interface ContractAnalysisRequestedDetail {
  taskId: string;
  contractId: string;
  contractContent: string;
  requesterId: string;
}

export interface SimilarContractsRequestedDetail {
  taskId: string;
  contractId: string;
  keyTerms: string[];
  limit: number;
}

export interface SimilarContractsFoundDetail {
  taskId: string;
  contractId: string;
  similarContracts: SimilarContract[];
}

export interface SimilarContract {
  id: string;
  title: string;
  similarityScore: number;
  relationshipType: string;
  keyDifferences: string[];
}

export interface LegalReferencesRequestedDetail {
  taskId: string;
  contractId: string;
  clauses: {
    id: string;
    text: string;
  }[];
}

export interface LegalReferencesFoundDetail {
  taskId: string;
  contractId: string;
  legalReferences: LegalReference[];
}

export interface LegalReference {
  id: string;
  title: string;
  jurisdiction: string;
  relevanceScore: number;
  summary: string;
  citation: string;
}

export interface ContractAnalysisCompletedDetail {
  taskId: string;
  contractId: string;
  result: ContractRelationshipGraph;
}

// Output Graph Format
export interface ContractRelationshipGraph {
  contract: {
    id: string;
    title: string;
    parties: string[];
    date: string;
    key_clauses: {
      id: string;
      title: string;
      text: string;
    }[];
  };
  related_contracts: {
    id: string;
    title: string;
    similarity_score: number;
    relationship_type: string;
    key_differences: string[];
  }[];
  related_laws: {
    id: string;
    title: string;
    jurisdiction: string;
    relevance_score: number;
    summary: string;
    citation: string;
  }[];
}

// DynamoDB Types
export interface TaskStateItem {
  taskId: string; // Partition key
  itemType: string; // Sort key - can be "task", "contract", "similar_contracts", or "legal_references"
  data: string; // JSON stringified data
  timestamp: number; // For sorting/TTL
}

// Step Functions Types
export interface StepFunctionsInput {
  taskId: string;
  contractId: string;
  contractContent: string;
  requesterId: string;
}

export interface StepFunctionsOutput {
  taskId: string;
  contractId: string;
  result: ContractRelationshipGraph;
  status: "success" | "failure";
  error?: string;
}

// SQS Message Types
export interface SQSMessage<T> {
  messageId: string;
  receiptHandle: string;
  body: string; // JSON stringified EventBridgeEvent<T>
  attributes: Record<string, string>;
  messageAttributes: Record<string, {
    dataType: string;
    stringValue?: string;
    binaryValue?: Buffer;
  }>;
  md5OfBody: string;
  eventSource: string;
  eventSourceARN: string;
  awsRegion: string;
}
```

Legal Contract Agent Implementation

The Legal Contract Agent is the primary entry point for user requests. It's implemented as a Fargate container that exposes A2A protocol endpoints, analyzes contracts using Claude 3.7 Sonnet via Amazon Bedrock, and orchestrates agent workflow. This agent handles incoming requests, stores task states in DynamoDB, starts Step Functions workflows, processes SQS messages, and compiles the final contract relationship graph. It's a complex component that ties together all the other pieces of our system.

```typescript
// Legal Contract Agent Implementation

import { EventBridgeClient, PutEventsCommand } from '@aws-sdk/client-eventbridge';
import { BedrockRuntimeClient, InvokeModelCommand } from '@aws-sdk/client-bedrock-runtime';
import { DynamoDBClient } from '@aws-sdk/client-dynamodb';
import { DynamoDBDocumentClient, PutCommand, GetCommand } from '@aws-sdk/lib-dynamodb';
import { SQSClient, ReceiveMessageCommand, DeleteMessageCommand } from '@aws-sdk/client-sqs';
import { SFNClient, StartExecutionCommand, SendTaskSuccessCommand } from '@aws-sdk/client-sfn';
import express from 'express';
import { v4 as uuidv4 } from 'uuid';
import {
  Task, Message,
  SimilarContractsRequestedDetail, SimilarContractsFoundDetail,
  LegalReferencesRequestedDetail, LegalReferencesFoundDetail,
  ContractAnalysisCompletedDetail, ContractRelationshipGraph,
  TaskStateItem, StepFunctionsInput
} from './types';

// Initialize AWS clients
const eventBridgeClient = new EventBridgeClient({ region: 'us-east-1' });
const bedrockClient = new BedrockRuntimeClient({ region: 'us-east-1' });
const dynamoClient = new DynamoDBClient({ region: 'us-east-1' });
const docClient = DynamoDBDocumentClient.from(dynamoClient);
const sqsClient = new SQSClient({ region: 'us-east-1' });
const sfnClient = new SFNClient({ region: 'us-east-1' });

// Configuration constants
const EVENT_BUS_NAME = 'LegalContractAnalysisEventBus';
const TASK_TABLE_NAME = 'LegalContractTaskState';
const CONTRACT_ANALYSIS_QUEUE_URL = process.env.CONTRACT_ANALYSIS_QUEUE_URL || '';
const STEP_FUNCTIONS_STATE_MACHINE_ARN = process.env.STEP_FUNCTIONS_STATE_MACHINE_ARN || '';

// A2A Server implementation for Legal Contract Agent
export class LegalContractAgent {
  private tasks: Map<string, Task> = new Map();
  private isProcessingQueue = false;

  constructor() {
    // Initialize Express server for A2A endpoints
    const app = express();
    app.use(express.json());

    // A2A Protocol endpoints
    app.get('/.well-known/agent.json', this.getAgentCard);
    app.post('/tasks/send', this.handleTaskSend);
    app.get('/tasks/:taskId', this.getTaskStatus);
    app.post('/tasks/:taskId/cancel', this.cancelTask);

    // Step Functions callback endpoints
    app.post('/step-functions/callback', this.handleStepFunctionsCallback);

    // Start server
    const port = process.env.PORT || 3000;
    app.listen(port, () => {
      console.log(`Legal Contract Agent running on port ${port}`);
    });

    // Start SQS message polling
    this.startQueueProcessing();
  }

  // Return the Agent Card as per A2A protocol
  private getAgentCard = (req: express.Request, res: express.Response) => {
    res.json({
      name: 'Legal Contract Analysis Agent',
      description: 'Analyzes legal contracts and finds relationships with other contracts and laws',
      version: '1.0.0',
      capabilities: ['contract_analysis', 'streaming'],
      endpoint: process.env.AGENT_ENDPOINT || 'http://localhost:3000',
      authentication: {
        type: 'aws_iam'
      }
    });
  };

  // Handle incoming task requests
  private handleTaskSend = async (req: express.Request, res: express.Response) => {
    try {
      const { message } = req.body;
      const taskId = req.body.taskId || uuidv4();

      // Reject the request before persisting anything if it carries no contract text
      const contractContent = message ? this.extractContractContent(message) : null;
      if (!contractContent) {
        return res.status(400).json({ error: 'No contract content found in message' });
      }

      // Create a new task
      const task: Task = {
        id: taskId,
        status: 'submitted',
        messages: [message],
        artifacts: [],
        created_at: new Date().toISOString(),
        updated_at: new Date().toISOString()
      };
      this.tasks.set(taskId, task);

      // Store task in DynamoDB
      await this.storeTaskState(taskId, 'task', task);

      // Start Step Functions workflow
      const contractId = uuidv4();
      const requesterId = req.body.requesterId || 'anonymous';
      await this.startStepFunctionsWorkflow(taskId, contractId, contractContent, requesterId);

      // Return the task immediately
      res.json({ task });
    } catch (error) {
      console.error('Error handling task:', error);
      res.status(500).json({ error: 'Failed to process task' });
    }
  };

  // Get status of an existing task
  private getTaskStatus = async (req: express.Request, res: express.Response) => {
    const { taskId } = req.params;
    try {
      const task = await this.loadTask(taskId);
      if (!task) {
        return res.status(404).json({ error: 'Task not found' });
      }
      res.json({ task });
    } catch (error) {
      console.error('Error getting task status:', error);
      res.status(500).json({ error: 'Failed to get task status' });
    }
  };

  // Cancel an existing task
  private cancelTask = async (req: express.Request, res: express.Response) => {
    const { taskId } = req.params;
    try {
      const task = await this.loadTask(taskId);
      if (!task) {
        return res.status(404).json({ error: 'Task not found' });
      }

      // Update task status
      task.status = 'canceled';
      task.updated_at = new Date().toISOString();

      // Store updated task in DynamoDB
      await this.storeTaskState(taskId, 'task', task);
      res.json({ task });
    } catch (error) {
      console.error('Error canceling task:', error);
      res.status(500).json({ error: 'Failed to cancel task' });
    }
  };

  // Handle Step Functions callback
  private handleStepFunctionsCallback = async (req: express.Request, res: express.Response) => {
    try {
      const { taskToken, output } = req.body;

      // Send task success to Step Functions
      await sfnClient.send(new SendTaskSuccessCommand({
        taskToken,
        output: JSON.stringify(output)
      }));
      res.json({ success: true });
    } catch (error) {
      console.error('Error handling Step Functions callback:', error);
      res.status(500).json({ error: 'Failed to handle Step Functions callback' });
    }
  };

  // Start Step Functions workflow
  private async startStepFunctionsWorkflow(
    taskId: string,
    contractId: string,
    contractContent: string,
    requesterId: string
  ) {
    const input: StepFunctionsInput = {
      taskId,
      contractId,
      contractContent,
      requesterId
    };
    await sfnClient.send(new StartExecutionCommand({
      stateMachineArn: STEP_FUNCTIONS_STATE_MACHINE_ARN,
      name: `contract-analysis-${taskId}`,
      input: JSON.stringify(input)
    }));
  }

  // Start SQS message polling
  private startQueueProcessing() {
    if (this.isProcessingQueue) return;
    this.isProcessingQueue = true;
    this.pollQueue();
  }

  // Poll SQS queue for messages
  private async pollQueue() {
    while (this.isProcessingQueue) {
      try {
        const response = await sqsClient.send(new ReceiveMessageCommand({
          QueueUrl: CONTRACT_ANALYSIS_QUEUE_URL,
          MaxNumberOfMessages: 10,
          WaitTimeSeconds: 20
        }));
        for (const message of response.Messages ?? []) {
          try {
            await this.processQueueMessage(message);
            // Delete the message only after successful processing, so that a
            // failed message is redelivered and can eventually reach the DLQ
            await sqsClient.send(new DeleteMessageCommand({
              QueueUrl: CONTRACT_ANALYSIS_QUEUE_URL,
              ReceiptHandle: message.ReceiptHandle
            }));
          } catch (error) {
            console.error('Error processing SQS message; leaving it for redelivery:', error);
          }
        }
      } catch (error) {
        console.error('Error polling SQS queue:', error);
        // Wait before retrying
        await new Promise(resolve => setTimeout(resolve, 5000));
      }
    }
  }

  // Process SQS message; errors propagate to the caller so the message is not deleted
  private async processQueueMessage(message: any) {
    const body = JSON.parse(message.Body);
    const detailType = body['detail-type'];
    switch (detailType) {
      case 'SimilarContractsFound':
        await this.handleSimilarContractsFound(body.detail);
        break;
      case 'LegalReferencesFound':
        await this.handleLegalReferencesFound(body.detail);
        break;
      case 'ContractAnalysisCompleted':
        await this.handleContractAnalysisCompleted(body.detail);
        break;
      default:
        console.warn(`Unknown detail type: ${detailType}`);
    }
  }

  // Handle SimilarContractsFound event
  private async handleSimilarContractsFound(detail: SimilarContractsFoundDetail) {
    const { taskId, contractId, similarContracts } = detail;

    // Store similar contracts in DynamoDB
    await this.storeTaskState(taskId, 'similar_contracts', similarContracts);

    // Check if we can complete the task
    await this.checkTaskCompletion(taskId, contractId);
  }

  // Handle LegalReferencesFound event
  private async handleLegalReferencesFound(detail: LegalReferencesFoundDetail) {
    const { taskId, contractId, legalReferences } = detail;

    // Store legal references in DynamoDB
    await this.storeTaskState(taskId, 'legal_references', legalReferences);

    // Check if we can complete the task
    await this.checkTaskCompletion(taskId, contractId);
  }

  // Handle ContractAnalysisCompleted event
  private async handleContractAnalysisCompleted(detail: ContractAnalysisCompletedDetail) {
    const { taskId, result } = detail;

    // Get task from memory or DynamoDB
    const task = await this.loadTask(taskId);
    if (!task) {
      console.error(`Task ${taskId} not found`);
      return;
    }

    // Update task with final result
    task.status = 'completed';
    task.updated_at = new Date().toISOString();
    task.artifacts.push({
      id: uuidv4(),
      type: 'contract_relationship_graph',
      parts: [{
        type: 'data',
        content_type: 'application/json',
        content: result
      }],
      created_at: new Date().toISOString()
    });

    // Store updated task in DynamoDB
    await this.storeTaskState(taskId, 'task', task);
  }

  // Check if all required data is available to complete the task
  private async checkTaskCompletion(taskId: string, contractId: string) {
    try {
      // Get contract analysis
      const contractAnalysis = await this.readTaskState(taskId, 'contract');
      if (!contractAnalysis) {
        return; // Contract analysis not available yet
      }

      // Get similar contracts
      const similarContracts = await this.readTaskState(taskId, 'similar_contracts');
      if (!similarContracts) {
        return; // Similar contracts not available yet
      }

      // Get legal references
      const legalReferences = await this.readTaskState(taskId, 'legal_references');
      if (!legalReferences) {
        return; // Legal references not available yet
      }

      // Compile final results
      const finalResult = this.compileResults(contractId, contractAnalysis, similarContracts, legalReferences);

      // Publish completion event
      await this.publishCompletionEvent(taskId, contractId, finalResult);
    } catch (error) {
      console.error('Error checking task completion:', error);
    }
  }

  // Extract contract content from message
  private extractContractContent(message: Message): string | null {
    for (const part of message.parts) {
      if (part.type === 'text') {
        return part.content;
      }
    }
    return null;
  }

  // Analyze contract using Amazon Bedrock
  async analyzeContractWithBedrock(contractContent: string) {
    const prompt = `
Please analyze the following legal contract and extract:
1. Contract title
2. Parties involved
3. Contract date
4. Key clauses (with titles and text)

Contract:
${contractContent}

Provide the analysis in JSON format.
`;
    const response = await bedrockClient.send(new InvokeModelCommand({
      // Note: this ID selects Claude 3 Sonnet. To use Claude 3.7 Sonnet as described
      // in the text, substitute the corresponding Bedrock model or inference profile
      // ID available in your region.
      modelId: 'anthropic.claude-3-sonnet-20240229-v1:0',
      contentType: 'application/json',
      accept: 'application/json',
      body: JSON.stringify({
        anthropic_version: 'bedrock-2023-05-31',
        max_tokens: 4096,
        messages: [
          {
            role: 'user',
            content: prompt
          }
        ]
      })
    }));
    const responseBody = JSON.parse(new TextDecoder().decode(response.body));
    return JSON.parse(responseBody.content[0].text);
  }

  // Load a task from the in-memory cache, falling back to DynamoDB
  private async loadTask(taskId: string): Promise<Task | undefined> {
    let task = this.tasks.get(taskId);
    if (!task) {
      task = await this.readTaskState(taskId, 'task');
      if (task) {
        this.tasks.set(taskId, task);
      }
    }
    return task;
  }

  // Read one stored item for a task; returns undefined if it has not been written yet
  private async readTaskState(taskId: string, itemType: string): Promise<any | undefined> {
    const stored = await docClient.send(new GetCommand({
      TableName: TASK_TABLE_NAME,
      Key: { taskId, itemType }
    }));
    return stored.Item ? JSON.parse(stored.Item.data) : undefined;
  }

  // Store task state in DynamoDB
  private async storeTaskState(taskId: string, itemType: string, data: any) {
    const item: TaskStateItem = {
      taskId,
      itemType,
      data: JSON.stringify(data),
      timestamp: Date.now()
    };
    await docClient.send(new PutCommand({
      TableName: TASK_TABLE_NAME,
      Item: item
    }));
  }

  // Publish events to EventBridge to request similar contracts and legal references
  async publishContractAnalysisEvents(taskId: string, contractId: string, contractAnalysis: any) {
    // Extract key terms for similar contract search
    const keyTerms = contractAnalysis.key_clauses.map((clause: any) => clause.title);

    // Publish event to request similar contracts
    await eventBridgeClient.send(new PutEventsCommand({
      Entries: [
        {
          EventBusName: EVENT_BUS_NAME,
          Source: 'com.legalcontract.agent',
          DetailType: 'SimilarContractsRequested',
          Detail: JSON.stringify({
            taskId,
            contractId,
            keyTerms,
            limit: 5
          } as SimilarContractsRequestedDetail)
        }
      ]
    }));

    // Publish event to request legal references
    await eventBridgeClient.send(new PutEventsCommand({
      Entries: [
        {
          EventBusName: EVENT_BUS_NAME,
          Source: 'com.legalcontract.agent',
          DetailType: 'LegalReferencesRequested',
          Detail: JSON.stringify({
            taskId,
            contractId,
            clauses: contractAnalysis.key_clauses.map((clause: any) => ({
              id: clause.id,
              text: clause.text
            }))
          } as LegalReferencesRequestedDetail)
        }
      ]
    }));
  }

  // Compile final results into a contract relationship graph
  private compileResults(
    contractId: string,
    contractAnalysis: any,
    similarContracts: any[],
    legalReferences: any[]
  ): ContractRelationshipGraph {
    return {
      contract: {
        id: contractId,
        title: contractAnalysis.title,
        parties: contractAnalysis.parties,
        date: contractAnalysis.date,
        key_clauses: contractAnalysis.key_clauses
      },
      related_contracts: similarContracts.map(contract => ({
        id: contract.id,
        title: contract.title,
        similarity_score: contract.similarityScore,
        relationship_type: contract.relationshipType,
        key_differences: contract.keyDifferences
      })),
      related_laws: legalReferences.map(law => ({
        id: law.id,
        title: law.title,
        jurisdiction: law.jurisdiction,
        relevance_score: law.relevanceScore,
        summary: law.summary,
        citation: law.citation
      }))
    };
  }

  // Publish completion event
  private async publishCompletionEvent(taskId: string, contractId: string, result: ContractRelationshipGraph) {
    await eventBridgeClient.send(new PutEventsCommand({
      Entries: [
        {
          EventBusName: EVENT_BUS_NAME,
          Source: 'com.legalcontract.agent',
          DetailType: 'ContractAnalysisCompleted',
          Detail: JSON.stringify({
            taskId,
            contractId,
            result
          } as ContractAnalysisCompletedDetail)
        }
      ]
    }));
  }
}

// Start the agent when this file is executed directly
if (require.main === module) {
  new LegalContractAgent();
}
```

Document Retrieval Agent Implementation

The Document Retrieval Agent is implemented as an AWS Lambda function that responds to SimilarContractsRequested events from EventBridge via SQS. It uses the MCP protocol to interact with a document repository, searching for contracts similar to the one being analyzed. When it finds similar contracts, it stores them in DynamoDB and publishes a SimilarContractsFound event back to EventBridge. This agent demonstrates how to use Secrets Manager to securely store and retrieve API credentials and how to implement the MCP protocol for external API access.

// Document Retrieval Agent Implementation

import { Context, Handler } from 'aws-lambda';

import { EventBridgeClient, PutEventsCommand } from '@aws-sdk/client-eventbridge';

import { DynamoDBClient } from '@aws-sdk/client-dynamodb';

import { DynamoDBDocumentClient, PutCommand } from '@aws-sdk/lib-dynamodb';

import { SecretsManagerClient, GetSecretValueCommand } from '@aws-sdk/client-secrets-manager';

import axios from 'axios';

import {

SQSMessage,

EventBridgeEvent,

SimilarContractsRequestedDetail,

SimilarContractsFoundDetail,

SimilarContract,

TaskStateItem

} from './types';

// Initialize AWS clients

const eventBridgeClient = new EventBridgeClient({ region: 'us-east-1' });

const dynamoClient = new DynamoDBClient({ region: 'us-east-1' });

const docClient = DynamoDBDocumentClient.from(dynamoClient);

const secretsClient = new SecretsManagerClient({ region: 'us-east-1' });

// Configuration constants

const EVENT_BUS_NAME = 'LegalContractAnalysisEventBus';

const TASK_TABLE_NAME = 'LegalContractTaskState';

const DOCUMENT_ERP_API_SECRET_NAME = process.env.DOCUMENT_ERP_API_SECRET_NAME || 'document-erp-api-credentials';

// MCP Protocol client for Document ERP API

class MCPClient {

private baseUrl: string;

private apiKey: string;

constructor(baseUrl: string, apiKey: string) {

this.baseUrl = baseUrl;

this.apiKey = apiKey;

}

// Register this Lambda function as an MCP tool

async registerTool() {

try {

await axios.post(

`${this.baseUrl}/tools/register`,

{

name: 'document_retrieval',

description: 'Retrieves similar contracts from document repository',

version: '1.0.0',

authentication: {

type: 'api_key',

key: this.apiKey

}

},

{

headers: {

'Content-Type': 'application/json',

'Authorization': `Bearer ${this.apiKey}`

}

}

);

console.log('Successfully registered as MCP tool');

} catch (error) {

console.error('Failed to register as MCP tool:', error);

}

}

// Search for similar contracts using MCP protocol

async searchSimilarContracts(keyTerms: string[], limit: number): Promise<SimilarContract[]> {

try {

const response = await axios.post(

`${this.baseUrl}/search/contracts`,

{

key_terms: keyTerms,

limit: limit

},

{

headers: {

'Content-Type': 'application/json',

'Authorization': `Bearer ${this.apiKey}`

}

}

);

return response.data.contracts.map((contract: any) => ({

id: contract.id,

title: contract.title,

similarityScore: contract.similarity_score,

relationshipType: this.determineRelationshipType(contract),

keyDifferences: contract.key_differences || []

}));

} catch (error) {

console.error('Error searching for similar contracts:', error);

return [];

}

}

// Determine relationship type based on contract metadata

private determineRelationshipType(contract: any): string {

if (contract.is_predecessor) return 'predecessor';

if (contract.is_successor) return 'successor';

if (contract.is_template) return 'template';

if (contract.is_amendment) return 'amendment';

return 'similar';

}

}

// Get API credentials from Secrets Manager

async function getApiCredentials(): Promise<{ baseUrl: string; apiKey: string }> {

try {

const response = await secretsClient.send(new GetSecretValueCommand({

SecretId: DOCUMENT_ERP_API_SECRET_NAME

}));

const secretData = JSON.parse(response.SecretString || '{}');

return {

baseUrl: secretData.baseUrl || process.env.DOCUMENT_ERP_API_URL || 'http://localhost:3001',

apiKey: secretData.apiKey || process.env.DOCUMENT_ERP_API_KEY || 'mock-api-key'

};

} catch (error) {

console.error('Error getting API credentials from Secrets Manager:', error);

return {

baseUrl: process.env.DOCUMENT_ERP_API_URL || 'http://localhost:3001',

apiKey: process.env.DOCUMENT_ERP_API_KEY || 'mock-api-key'

};

}

}

// Store task state in DynamoDB

async function storeTaskState(taskId: string, itemType: string, data: any) {

const item: TaskStateItem = {

taskId,

itemType,

data: JSON.stringify(data),

timestamp: Date.now()

};

await docClient.send(new PutCommand({

TableName: TASK_TABLE_NAME,

Item: item

}));

}

// Lambda handler for processing SQS messages containing SimilarContractsRequested events

export const handler: Handler<SQSMessage<EventBridgeEvent<SimilarContractsRequestedDetail>>[], void> = async (

event: SQSMessage<EventBridgeEvent<SimilarContractsRequestedDetail>>[],

context: Context

) => {

console.log('Received event:', JSON.stringify(event, null, 2));

// Get API credentials

const credentials = await getApiCredentials();

const mcpClient = new MCPClient(credentials.baseUrl, credentials.apiKey);

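// Register as an MCP tool when running the unpublished $LATEST version. This check runs on every

// invocation, not only on cold starts; in production, registration would be done during deployment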

if (!process.env.AWS_LAMBDA_FUNCTION_VERSION || process.env.AWS_LAMBDA_FUNCTION_VERSION === '$LATEST') {

await mcpClient.registerTool();

}

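// Process each message. Lambda's SQS integration delivers the batch under event.Records,

// so read from there and fall back to a bare array to match the declared handler type

const records: SQSMessage<EventBridgeEvent<SimilarContractsRequestedDetail>>[] = (event as any).Records ?? event;

for (const record of records) {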

try {

const body = JSON.parse(record.body);

const { taskId, contractId, keyTerms, limit } = body.detail;

// Search for similar contracts using MCP protocol

const similarContracts = await mcpClient.searchSimilarContracts(keyTerms, limit);

// Store results in DynamoDB

await storeTaskState(taskId, "similar_contracts", similarContracts);

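// Publish results back to EventBridge

const publishResponse = await eventBridgeClient.send(new PutEventsCommand({

Entries: [

{

EventBusName: EVENT_BUS_NAME,

Source: 'com.documentretrieval.agent',

DetailType: 'SimilarContractsFound',

Detail: JSON.stringify({

taskId,

contractId,

similarContracts

} as SimilarContractsFoundDetail)

}

]

}));

// PutEvents reports rejected entries in the response instead of throwing

if (publishResponse.FailedEntryCount) {

throw new Error(`Failed to publish SimilarContractsFound event for task ${taskId}`);

}

console.log('Successfully published SimilarContractsFound event');

} catch (error) {

console.error('Error processing event:', error);

// Rethrow so SQS redelivers the message and, if a dead-letter queue is configured, eventually routes it there

throw error;

}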

}

};

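// A2A Protocol implementation for Document Retrieval Agent

// This would be exposed via API Gateway in a real implementation.

// Note: this is a simplified Agent Card for illustration; the A2A specification defines a richer schema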

export const getAgentCard = async () => {

return {

name: 'Document Retrieval Agent',

description: 'Searches for and retrieves similar contracts from document repository',

version: '1.0.0',

capabilities: ['document_search', 'similarity_analysis'],

endpoint: process.env.AGENT_ENDPOINT || 'https://api.example.com/document-retrieval',

authentication: {

type: 'aws_iam'

}

};

};
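
The handler above reads `body.detail` straight from the parsed SQS message body. As a minimal sketch of that unwrapping step with basic validation added, the helper below parses one record and checks the fields the agent relies on. The `parseSimilarContractsRequest` name, the local interfaces, and the default limit of 10 are assumptions made for illustration; they are not part of the article's `./types` module.

```typescript
// Hypothetical local shapes for illustration; the article's real types live in './types'.
interface SimilarContractsRequest {
  taskId: string;
  contractId: string;
  keyTerms: string[];
  limit: number;
}

interface SqsRecordLike {
  messageId: string;
  body: string; // JSON-encoded EventBridge event, as delivered by an EventBridge -> SQS target
}

// Parse one SQS record and return the validated EventBridge detail payload.
// Throws on malformed input so the caller can decide whether to retry or discard.
function parseSimilarContractsRequest(record: SqsRecordLike): SimilarContractsRequest {
  const envelope = JSON.parse(record.body) as { 'detail-type'?: unknown; detail?: unknown };

  if (envelope['detail-type'] !== 'SimilarContractsRequested') {
    throw new Error(`Unexpected detail-type in message ${record.messageId}`);
  }

  const detail = (envelope.detail ?? {}) as Partial<SimilarContractsRequest>;
  if (typeof detail.taskId !== 'string' || typeof detail.contractId !== 'string') {
    throw new Error(`Missing taskId or contractId in message ${record.messageId}`);
  }
  if (!Array.isArray(detail.keyTerms) || !detail.keyTerms.every(t => typeof t === 'string')) {
    throw new Error(`keyTerms must be an array of strings in message ${record.messageId}`);
  }

  return {
    taskId: detail.taskId,
    contractId: detail.contractId,
    keyTerms: detail.keyTerms,
    // Assumed default of 10 when the requester omits a limit
    limit: typeof detail.limit === 'number' && detail.limit > 0 ? detail.limit : 10,
  };
}
```

Validating at the boundary keeps malformed events from reaching the external API call, and the thrown error gives the handler a single place to decide between retrying and discarding the message.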

Legal Knowledge Base Agent Implementation

Similarly, the Legal Knowledge Base Agent is implemented as an AWS Lambda function that responds to LegalReferencesRequested events delivered from EventBridge through an SQS queue. It uses MCP to query a legal database for laws and regulations relevant to the contract clauses. It extracts legal topics from the clauses, queries the legal database for each topic, deduplicates the results and sorts them by relevance, and publishes them back to EventBridge. This agent shows how to implement more involved business logic within a serverless function.

// Legal Knowledge Base Agent Implementation

import { Context, Handler } from 'aws-lambda';

import { EventBridgeClient, PutEventsCommand } from '@aws-sdk/client-eventbridge';

import { DynamoDBClient } from '@aws-sdk/client-dynamodb';

import { DynamoDBDocumentClient, PutCommand } from '@aws-sdk/lib-dynamodb';

import { SecretsManagerClient, GetSecretValueCommand } from '@aws-sdk/client-secrets-manager';

import axios from 'axios';

import {

SQSMessage,

EventBridgeEvent,

LegalReferencesRequestedDetail,

LegalReferencesFoundDetail,

LegalReference,

TaskStateItem

} from './types';

// Initialize AWS clients

const eventBridgeClient = new EventBridgeClient({ region: 'us-east-1' });

const dynamoClient = new DynamoDBClient({ region: 'us-east-1' });

const docClient = DynamoDBDocumentClient.from(dynamoClient);

const secretsClient = new SecretsManagerClient({ region: 'us-east-1' });

// Configuration constants

const EVENT_BUS_NAME = 'LegalContractAnalysisEventBus';

const TASK_TABLE_NAME = 'LegalContractTaskState';

const LAWS_STORAGE_API_SECRET_NAME = process.env.LAWS_STORAGE_API_SECRET_NAME || 'laws-storage-api-credentials';

// MCP Protocol client for Laws Storage API

class MCPClient {

private baseUrl: string;

private apiKey: string;

constructor(baseUrl: string, apiKey: string) {

this.baseUrl = baseUrl;

this.apiKey = apiKey;

}

// Register this Lambda function as an MCP tool

async registerTool() {

try {

await axios.post(

`${this.baseUrl}/tools/register`,

{

name: 'legal_knowledge_base',

description: 'Retrieves laws and regulations related to contract clauses',

version: '1.0.0',

authentication: {

type: 'api_key',

key: this.apiKey

}

},

{

headers: {

'Content-Type': 'application/json',

'Authorization': `Bearer ${this.apiKey}`

}

}

);

console.log('Successfully registered as MCP tool');

} catch (error) {

console.error('Failed to register as MCP tool:', error);

}

}

// Search for relevant legal references using MCP protocol

async findLegalReferences(clauses: { id: string; text: string }[]): Promise<LegalReference[]> {

try {

// Extract key legal topics from clauses

const legalTopics = this.extractLegalTopics(clauses);

// Query the legal database for each topic

const legalReferences: LegalReference[] = [];

for (const topic of legalTopics) {

const response = await axios.post(

`${this.baseUrl}/search/laws`,

{

topic: topic,

limit: 3 // Get top 3 most relevant laws per topic

},

{

headers: {

'Content-Type': 'application/json',

'Authorization': `Bearer ${this.apiKey}`

}

}

);

// Add results to the collection

legalReferences.push(...response.data.laws.map((law: any) => ({

id: law.id,

title: law.title,

jurisdiction: law.jurisdiction,

relevanceScore: law.relevance_score,

summary: law.summary,

citation: law.citation

})));

}

// Sort by relevance and remove duplicates

return this.deduplicateAndSort(legalReferences);

} catch (error) {

console.error('Error finding legal references:', error);

return [];

}

}

// Extract key legal topics from contract clauses

private extractLegalTopics(clauses: { id: string; text: string }[]): string[] {

// In a real implementation, this would use NLP to extract legal topics

// For this example, we'll use a simple keyword-based approach

const legalTopicKeywords: Record<string, string[]> = {

'payment': ['payment', 'compensation', 'fee', 'invoice'],

'liability': ['liability', 'indemnification', 'indemnify', 'damage'],

'termination': ['termination', 'terminate', 'cancel', 'end'],

'confidentiality': ['confidential', 'confidentiality', 'secret', 'disclosure'],

'intellectual_property': ['intellectual property', 'copyright', 'patent', 'trademark']

};

const topics = new Set<string>();

for (const clause of clauses) {

const lowerText = clause.text.toLowerCase();

for (const [topic, keywords] of Object.entries(legalTopicKeywords)) {

if (keywords.some(keyword => lowerText.includes(keyword))) {

topics.add(topic);

}

}

}

return Array.from(topics);

}

// Deduplicate and sort legal references by relevance

private deduplicateAndSort(references: LegalReference[]): LegalReference[] {

// Remove duplicates based on ID

const uniqueReferences = references.reduce((acc, current) => {

const existingRef = acc.find(ref => ref.id === current.id);

if (!existingRef) {

acc.push(current);

} else if (current.relevanceScore > existingRef.relevanceScore) {

// Keep the one with higher relevance score

acc = acc.filter(ref => ref.id !== current.id);

acc.push(current);

}

return acc;

}, [] as LegalReference[]);

// Sort by relevance score (descending)

return uniqueReferences.sort((a, b) => b.relevanceScore - a.relevanceScore);

}

}

// Get API credentials from Secrets Manager

async function getApiCredentials(): Promise<{ baseUrl: string; apiKey: string }> {

try {

const response = await secretsClient.send(new GetSecretValueCommand({

SecretId: LAWS_STORAGE_API_SECRET_NAME

}));

const secretData = JSON.parse(response.SecretString || '{}');

return {

baseUrl: secretData.baseUrl || process.env.LAWS_STORAGE_API_URL || 'http://localhost:3002',

apiKey: secretData.apiKey || process.env.LAWS_STORAGE_API_KEY || 'mock-api-key'

};

} catch (error) {

console.error('Error getting API credentials from Secrets Manager:', error);

return {

baseUrl: process.env.LAWS_STORAGE_API_URL || 'http://localhost:3002',

apiKey: process.env.LAWS_STORAGE_API_KEY || 'mock-api-key'

};

}

}

// Store task state in DynamoDB

async function storeTaskState(taskId: string, itemType: string, data: any) {

const item: TaskStateItem = {

taskId,

itemType,

data: JSON.stringify(data),

timestamp: Date.now()

};

await docClient.send(new PutCommand({

TableName: TASK_TABLE_NAME,

Item: item

}));

}

// Lambda handler for processing SQS messages containing LegalReferencesRequested events

export const handler: Handler<SQSMessage<EventBridgeEvent<LegalReferencesRequestedDetail>>[], void> = async (

event: SQSMessage<EventBridgeEvent<LegalReferencesRequestedDetail>>[],

context: Context

) => {

console.log('Received event:', JSON.stringify(event, null, 2));

// Get API credentials

const credentials = await getApiCredentials();

const mcpClient = new MCPClient(credentials.baseUrl, credentials.apiKey);

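// Register as an MCP tool when running the unpublished $LATEST version. This check runs on every

// invocation, not only on cold starts; in production, registration would be done during deployment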

if (!process.env.AWS_LAMBDA_FUNCTION_VERSION || process.env.AWS_LAMBDA_FUNCTION_VERSION === '$LATEST') {

await mcpClient.registerTool();

}

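// Process each message. Lambda's SQS integration delivers the batch under event.Records,

// so read from there and fall back to a bare array to match the declared handler type

const records: SQSMessage<EventBridgeEvent<LegalReferencesRequestedDetail>>[] = (event as any).Records ?? event;

for (const record of records) {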

try {

const body = JSON.parse(record.body);

const { taskId, contractId, clauses } = body.detail;

// Find relevant legal references using MCP protocol

const legalReferences = await mcpClient.findLegalReferences(clauses);

// Store results in DynamoDB

await storeTaskState(taskId, "legal_references", legalReferences);

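// Publish results back to EventBridge

const publishResponse = await eventBridgeClient.send(new PutEventsCommand({

Entries: [

{

EventBusName: EVENT_BUS_NAME,

Source: 'com.legalknowledgebase.agent',

DetailType: 'LegalReferencesFound',

Detail: JSON.stringify({

taskId,

contractId,

legalReferences

} as LegalReferencesFoundDetail)

}

]

}));

// PutEvents reports rejected entries in the response instead of throwing

if (publishResponse.FailedEntryCount) {

throw new Error(`Failed to publish LegalReferencesFound event for task ${taskId}`);

}

console.log('Successfully published LegalReferencesFound event');

} catch (error) {

console.error('Error processing event:', error);

// Rethrow so SQS redelivers the message and, if a dead-letter queue is configured, eventually routes it there

throw error;

}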

}

};

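// A2A Protocol implementation for Legal Knowledge Base Agent

// This would be exposed via API Gateway in a real implementation.

// Note: this is a simplified Agent Card for illustration; the A2A specification defines a richer schema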

export const getAgentCard = async () => {

return {

name: 'Legal Knowledge Base Agent',

description: 'Retrieves laws and regulations related to contract clauses',

version: '1.0.0',

capabilities: ['legal_reference', 'regulatory_compliance'],

endpoint: process.env.AGENT_ENDPOINT || 'https://api.example.com/legal-knowledge-base',

authentication: {

type: 'aws_iam'

}

};

};
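
The `extractLegalTopics` method above matches keywords with `includes`, so a short keyword such as 'end' also matches inside words like 'vendor'. One possible refinement, sketched here under the assumption that whole-word matching is acceptable for this use case, compiles each topic's keywords into a single word-boundary regular expression. The `matchTopics` function and the reduced keyword table are hypothetical.

```typescript
// Reduced, hypothetical keyword table for illustration
const topicKeywords: Record<string, string[]> = {
  termination: ['termination', 'terminate', 'cancel', 'end'],
  payment: ['payment', 'fee', 'invoice'],
};

// Escape characters that have special meaning in a regular expression
function escapeRegExp(text: string): string {
  return text.replace(/[.*+?^${}()|[\]\\]/g, '\\$&');
}

// Precompile one case-insensitive, whole-word pattern per topic
const topicPatterns: [string, RegExp][] = Object.entries(topicKeywords).map(
  ([topic, keywords]): [string, RegExp] => [
    topic,
    new RegExp(`\\b(?:${keywords.map(escapeRegExp).join('|')})\\b`, 'i'),
  ]
);

// Return the topics whose keywords appear as whole words in any clause
function matchTopics(clauseTexts: string[]): string[] {
  const topics = new Set<string>();
  for (const text of clauseTexts) {
    for (const [topic, pattern] of topicPatterns) {
      if (pattern.test(text)) {
        topics.add(topic);
      }
    }
  }
  return Array.from(topics);
}
```

The trade-off is that whole-word matching misses inflected forms such as "terminated" unless they are listed explicitly, so the keyword table would need those variants or a stemming step.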

To orchestrate the workflow between these agents, we use AWS Step Functions. Our workflow definition includes steps for analyzing the contract, waiting for document retrieval and legal references, checking if results are ready, and compiling the final results. Step Functions manages the state transitions and ensures all steps are completed successfully, with appropriate error handling and retry logic.
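
To make the workflow shape concrete, here is a hedged sketch of what such a state machine could look like in Amazon States Language, written as a TypeScript object. The state names, the placeholder Lambda ARNs, the 10-second wait, and the `$.resultsReady` flag are all assumptions for illustration, not the article's actual definition. The small `findDanglingTransitions` helper is also hypothetical; it only checks that every transition points at a defined state.

```typescript
// Placeholder ARNs; substitute the functions deployed in your own account
const ANALYZE_FN = 'arn:aws:lambda:us-east-1:123456789012:function:AnalyzeContract';
const CHECK_FN = 'arn:aws:lambda:us-east-1:123456789012:function:CheckResults';
const COMPILE_FN = 'arn:aws:lambda:us-east-1:123456789012:function:CompileResults';

// Subset of Amazon States Language fields used by this sketch
type State = {
  Type: 'Task' | 'Wait' | 'Choice';
  Resource?: string;
  Seconds?: number;
  Next?: string;
  Default?: string;
  End?: boolean;
  Choices?: { Variable: string; BooleanEquals: boolean; Next: string }[];
  Retry?: { ErrorEquals: string[]; IntervalSeconds: number; MaxAttempts: number; BackoffRate: number }[];
};

type Workflow = { StartAt: string; States: Record<string, State> };

// Retry failed tasks up to three times with exponential backoff
const retryPolicy = [{ ErrorEquals: ['States.TaskFailed'], IntervalSeconds: 2, MaxAttempts: 3, BackoffRate: 2 }];

const contractAnalysisWorkflow: Workflow = {
  StartAt: 'AnalyzeContract',
  States: {
    AnalyzeContract: { Type: 'Task', Resource: ANALYZE_FN, Retry: retryPolicy, Next: 'WaitForAgents' },
    WaitForAgents: { Type: 'Wait', Seconds: 10, Next: 'CheckResults' },
    CheckResults: { Type: 'Task', Resource: CHECK_FN, Retry: retryPolicy, Next: 'AreResultsReady' },
    AreResultsReady: {
      Type: 'Choice',
      Choices: [{ Variable: '$.resultsReady', BooleanEquals: true, Next: 'CompileResults' }],
      Default: 'WaitForAgents', // Loop back and wait again until both agents have reported
    },
    CompileResults: { Type: 'Task', Resource: COMPILE_FN, Retry: retryPolicy, End: true },
  },
};

// Return the names of transition targets that are not defined as states
function findDanglingTransitions(workflow: Workflow): string[] {
  const targets = [workflow.StartAt];
  for (const state of Object.values(workflow.States)) {
    if (state.Next) targets.push(state.Next);
    if (state.Default) targets.push(state.Default);
    for (const choice of state.Choices ?? []) targets.push(choice.Next);
  }
  return targets.filter(name => !(name in workflow.States));
}
```

Serializing `contractAnalysisWorkflow` with `JSON.stringify` yields a definition document. Note that this polling loop has no upper bound; a production definition would likely cap the number of iterations or replace polling with a callback-based wait.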

Finally, we use AWS CDK to define and deploy our infrastructure. Our CDK stack creates all the necessary AWS resources: EventBridge event bus, SQS queues, DynamoDB table, Secrets Manager secrets, IAM roles, Lambda functions, VPC, ECS cluster, Fargate task definition and service, Step Functions state machine, and API Gateway. This infrastructure-as-code approach ensures that our environment is consistent, reproducible, and version-controlled.

The code examples in this article demonstrate how to implement each component in TypeScript. They show how to use the AWS SDK to interact with various AWS services, implement the A2A protocol, use MCP for external API access, and tie everything together into a cohesive system. While the examples focus on a legal contract analysis system, the patterns and techniques can be applied to any agent-based system.

Authentication and Security Considerations

Security is critical to any agent ecosystem, especially one that handles sensitive legal documents. Our system relies on AWS Identity and Access Management (IAM) to ensure that every component is authenticated and authorized and that all data is protected. Each component (Lambda functions, Fargate tasks, Step Functions) has its own IAM role with least-privilege permissions, granting access only to the resources it needs. IAM roles enable secure access between AWS services without sharing credentials, using temporary security credentials that are automatically rotated. EventBridge policies control which services can publish or subscribe to specific event patterns, ensuring that only authorized components can participate in the event flow. Resource-based policies for DynamoDB, SQS, and other services restrict access to specific actions and resources.
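
As an illustration of what least privilege could mean for one of the Lambda-based agents, the sketch below builds an IAM policy document scoped to the four resources that agent touches. The `buildAgentPolicy` function, the `AgentResources` shape, and the exact action list are assumptions for illustration; the article's CDK stack may grant permissions differently.

```typescript
interface PolicyStatement {
  Effect: 'Allow';
  Action: string[];
  Resource: string[];
}

// Hypothetical bundle of ARNs for a single agent
interface AgentResources {
  queueArn: string;
  eventBusArn: string;
  tableArn: string;
  secretArn: string;
}

// Build a policy that names specific actions and specific resources, with no wildcards
function buildAgentPolicy(resources: AgentResources): { Version: string; Statement: PolicyStatement[] } {
  return {
    Version: '2012-10-17',
    Statement: [
      {
        // Permissions Lambda needs to poll and acknowledge messages from the agent's queue
        Effect: 'Allow',
        Action: ['sqs:ReceiveMessage', 'sqs:DeleteMessage', 'sqs:GetQueueAttributes'],
        Resource: [resources.queueArn],
      },
      // Publish result events to the shared event bus only
      { Effect: 'Allow', Action: ['events:PutEvents'], Resource: [resources.eventBusArn] },
      // Write task state; the agent never reads or deletes items
      { Effect: 'Allow', Action: ['dynamodb:PutItem'], Resource: [resources.tableArn] },
      // Read this agent's own API credentials and nothing else
      { Effect: 'Allow', Action: ['secretsmanager:GetSecretValue'], Resource: [resources.secretArn] },
    ],
  };
}
```

Because each agent receives its own set of ARNs, a compromised Document Retrieval Agent could not read the Legal Knowledge Base Agent's secret or consume from its queue.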

For managing API keys and other sensitive credentials, we use AWS Secrets Manager, which stores them encrypted and supports automatic rotation. Non-sensitive configuration is passed via environment variables, and Parameter Store can be used for configuration values that don't require the security of Secrets Manager.
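
The agents in this article call Secrets Manager on every invocation. A common refinement is to cache the secret in memory for a short time, so warm Lambda invocations reuse it while rotated values are still picked up after the cache expires. The sketch below shows one way to do that; `createCachedLoader` is a hypothetical helper, and the loader passed to it stands in for a function such as `getApiCredentials`.

```typescript
// Wrap an async loader with an in-memory cache that expires after ttlMs milliseconds.
// The clock is injectable so the expiry logic can be exercised without waiting.
function createCachedLoader<T>(
  load: () => Promise<T>,
  ttlMs: number,
  now: () => number = Date.now
): () => Promise<T> {
  let cached: { value: T; expiresAt: number } | undefined;

  return async () => {
    // Serve from cache while the entry is still fresh
    if (cached && now() < cached.expiresAt) {
      return cached.value;
    }
    // Otherwise reload and remember when the new entry expires
    const value = await load();
    cached = { value, expiresAt: now() + ttlMs };
    return value;
  };
}
```

Declared at module scope, for example `const getCachedCredentials = createCachedLoader(getApiCredentials, 5 * 60 * 1000)`, the cache survives across invocations of a warm execution environment. The five-minute TTL is an arbitrary choice; a shorter value picks up rotated secrets sooner at the cost of more Secrets Manager calls.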

For A2A protocol authentication, we use IAM roles to authenticate agents when they communicate via the A2A protocol, implement JWT or IAM authorization for API Gateway endpoints, and sign requests between agents to ensure authenticity.

To protect sensitive contract data, all data stored in DynamoDB, SQS, and other services is encrypted at rest, all communication between components uses TLS for encryption in transit, Fargate tasks run in a private VPC for network isolation, and CloudTrail and CloudWatch logs track all access to sensitive resources.

Advantages of Serverless EDAA

Implementing A2A with AWS EventBridge, SQS, Lambda, Fargate, and other serverless components offers several advantages over traditional approaches. The first is scalability: Lambda functions scale automatically with demand, and Fargate services can do the same through ECS Service Auto Scaling, so there is no need to provision or manage servers. The system can handle spikes in traffic without pre-provisioned resources, and each agent can scale independently based on its specific workload.

Cost efficiency is another significant advantage. With a pay-per-use model, you pay only for the compute resources you use. Lambda functions incur no compute charges while idle, although a Fargate service is billed for as long as its tasks run, so its minimum task count should be sized accordingly. Reduced operational overhead means less time spent on infrastructure management, and granular billing ties costs to the resources each component actually consumes.

Operational simplicity is also a significant benefit. AWS handles the underlying infrastructure, patching, and maintenance; CloudWatch provides metrics, logs, and alarms out of the box; infrastructure as code allows you to deploy and update your entire stack with a single command; and the reduced operational burden lets you focus on business logic rather than infrastructure management.

Reliability and resilience are built into the architecture. AWS services are designed to be highly available with built-in redundancy. SQS and Step Functions provide automatic retry capabilities, and dead-letter queues capture failed messages for later analysis and reprocessing. You can also deploy across multiple AWS Regions for disaster recovery.
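
When a Lambda function consumes an SQS batch, a failure on one message would normally cause the entire batch to be retried. Lambda's partial batch response feature lets the handler report only the failed message IDs, provided `ReportBatchItemFailures` is enabled on the event source mapping. The sketch below shows the response shape under that assumption; `processBatch` and the `processOne` callback are hypothetical names, not part of the article's agents.

```typescript
interface SqsRecordLike {
  messageId: string;
  body: string;
}

// Response shape Lambda expects when ReportBatchItemFailures is enabled
interface SqsBatchResponse {
  batchItemFailures: { itemIdentifier: string }[];
}

// Process every record and report only the ones that failed
async function processBatch(
  records: SqsRecordLike[],
  processOne: (record: SqsRecordLike) => Promise<void>
): Promise<SqsBatchResponse> {
  const batchItemFailures: { itemIdentifier: string }[] = [];

  for (const record of records) {
    try {
      await processOne(record);
    } catch {
      // Only this message returns to the queue; messages that succeeded are deleted
      batchItemFailures.push({ itemIdentifier: record.messageId });
    }
  }

  return { batchItemFailures };
}
```

A message that keeps failing is redelivered until it exceeds the queue's `maxReceiveCount`, at which point SQS moves it to the configured dead-letter queue.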

Finally, this approach improves development velocity. You can focus on business logic rather than infrastructure, test individual components in isolation, update components independently without affecting the entire system, and reduce cognitive load by working on smaller, more manageable pieces of functionality.

Conclusion

Combining Google's A2A protocol with AWS serverless services creates a robust foundation for building scalable, event-driven agent architectures. By leveraging EventBridge, SQS, Lambda, Fargate, Step Functions, and other AWS services, we can create flexible and cost-effective agent ecosystems.

The legal contract analysis system we've built demonstrates how specialized agents can collaborate through events to create something greater than the sum of their parts. Each agent focuses on its core competency (contract analysis, document retrieval, or legal knowledge), while the event-driven architecture handles communication and coordination.

This approach offers several key benefits: decoupling allows agents to evolve independently without affecting others, scalability ensures the system scales automatically to handle varying workloads, resilience means failures in one component don't bring down the entire system, visibility ensures all events are logged and can be monitored centrally, and cost-efficiency means you pay only for what you use, with no idle resources.

As agent ecosystems grow in complexity, event-driven architectures will become increasingly important. They provide the decoupling, scalability, and visibility needed to manage complex networks of agents, allowing us to build AI systems that are both powerful and maintainable.
