Event‑Driven Architecture as the Foundation of Enterprise Autonomy

Large language models gave enterprises impressive linguistic fluency, but fluency alone does not yield autonomous behaviour. Most current deployments remain bound by a request/response execution model. The lag between a business signal and its corresponding action is a structural bottleneck, especially when customer experience is measured in milliseconds. Proactive agents address this by initiating work based on observed events rather than waiting for explicit user intent. By continuously consuming domain‑event streams, an agent can detect anomalous patterns, infer likely causes, and dispatch corrective workflows before the situation becomes visible on a dashboard.

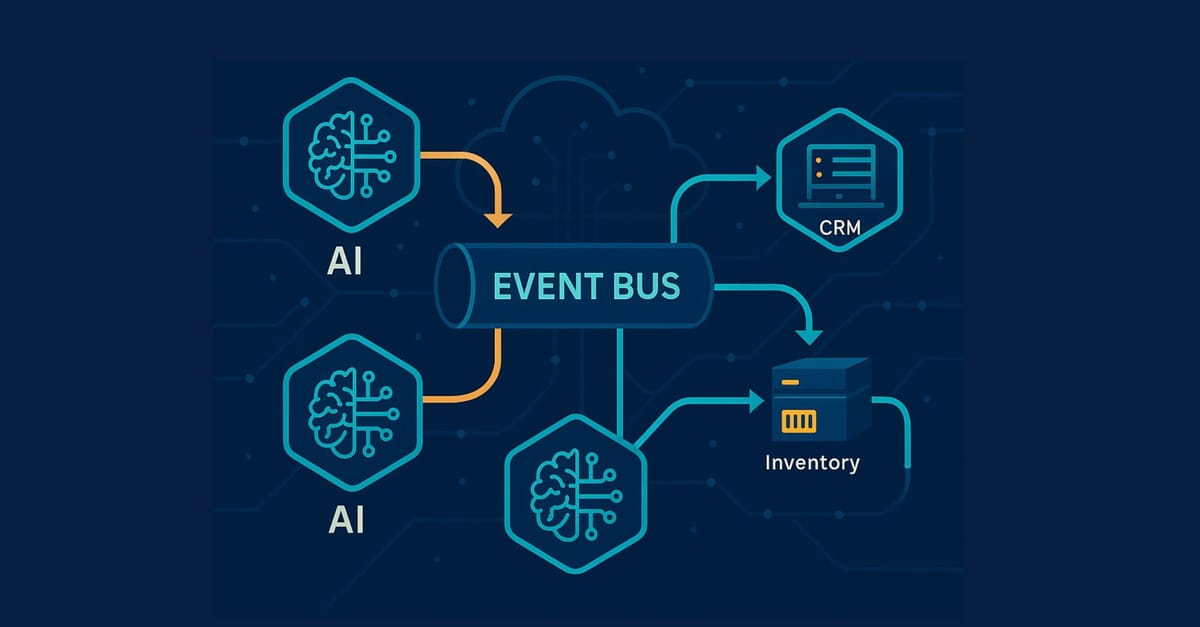

Such immediacy is not achievable in a point‑to‑point architecture. Agents require a substrate where every state mutation is expressed as an immutable event, and where producers and consumers evolve independently. Event‑driven architecture (EDA) satisfies this need. When sales services emit inventory_low and logistics services emit shipment_delayed, an agent interested in supply‑chain resilience merely subscribes to the relevant topics and reacts in near real time. Decoupling eliminates back‑pressure loops, isolates faults, and permits horizontal scaling without re‑coordinating clients.
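The subscribe-and-react relationship described above can be sketched in a few lines of Python. This is a minimal in-memory illustration, not a production broker: `InMemoryBus`, `Event`, and `SupplyChainAgent` are hypothetical names, and the `sku` payload field is an assumption made for the example. A real deployment would sit on a durable bus such as Kafka.

```python
import time
from collections import defaultdict
from dataclasses import dataclass, field
from types import MappingProxyType
from typing import Callable, Dict, List, Mapping


@dataclass(frozen=True)
class Event:
    """An immutable fact; the frozen dataclass rejects attribute reassignment."""
    topic: str
    payload: Mapping[str, object]
    timestamp: float = field(default_factory=time.time)


class InMemoryBus:
    """Hypothetical stand-in for a durable event bus."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Event], None]]] = defaultdict(list)
        self.log: List[Event] = []  # append-only record of everything published

    def subscribe(self, topic: str, handler: Callable[[Event], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> Event:
        # Wrap a copy of the payload in a read-only view so consumers cannot mutate it.
        event = Event(topic, MappingProxyType(dict(payload)))
        self.log.append(event)
        for handler in self._subscribers[topic]:
            handler(event)
        return event


class SupplyChainAgent:
    """Reacts only to the topics it subscribed to; producers never reference it."""

    def __init__(self, bus: InMemoryBus) -> None:
        self.alerts: List[tuple] = []
        bus.subscribe("inventory_low", self.on_event)
        bus.subscribe("shipment_delayed", self.on_event)

    def on_event(self, event: Event) -> None:
        self.alerts.append((event.topic, event.payload["sku"]))


bus = InMemoryBus()
agent = SupplyChainAgent(bus)
bus.publish("inventory_low", {"sku": "A-100", "on_hand": 3})
bus.publish("shipment_delayed", {"sku": "A-100", "eta_hours": 48})
bus.publish("order_created", {"sku": "B-200"})  # no subscriber, so the agent never sees it
```

The producers call only `publish`, so new agents can be added by subscribing to existing topics without touching the emitting services.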

A Layered Reference Stack

A production‑ready agent platform generally materialises across five loosely coupled, independently deployable layers. The interaction tier exposes agent output through REST, GraphQL, or conversational endpoints; its sole responsibility is marshalling input and output without embedding business logic. Directly beneath, an orchestration tier (implemented with Temporal, AWS Step Functions, or Cadence) binds long‑lived workflows, provides exactly‑once replay semantics, and records deterministic state transitions for audit. The agent tier houses specialised reasoning workers; each worker encapsulates a bounded context, delegates large‑language‑model inference where appropriate, and remains stateless between invocations except for an execution token. These workers rely on a data‑services fabric that surfaces transactional records from ERP systems, vector embeddings from Pinecone or Milvus, and domain APIs exposed over gRPC. All of the above run on an infrastructure foundation that supplies a durable event bus (Kafka, Pulsar, or NATS JetStream), strictly versioned contracts, distributed tracing, secret rotation, and policy enforcement integrated with Open Policy Agent. When the layers conform to this separation of concerns, new capabilities can be introduced by deploying an additional agent rather than refactoring the monolith.

Retail Walk‑Through

Suppose the event bus publishes an order_cancellation_spike event. A monitoring agent subscribed to anomaly channels instantiates a planning agent via a synchronous Remote Activity in Temporal. The planner queries historical fulfillment events stored as vector embeddings, recognizes a locality correlation with warehouse A, and emits a compensating reroute_orders command. A fulfillment agent consumes the command, recomputes the distribution using a min‑cost‑max‑flow algorithm, and publishes reroute_complete. The customer‑service UI, subscribed to this event, updates its timeline and notifies operators within minutes; no human coordination ceremony is required.
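To make the fulfillment agent's recomputation concrete, the sketch below solves a tiny min-cost max-flow instance with successive shortest paths (Bellman-Ford on the residual graph). Everything numeric here is an assumption for illustration: warehouses B and C stand in for the capacity lost at warehouse A, and the regions, capacities, and per-unit costs are invented. The article does not specify which min-cost-flow variant the agent would use.

```python
from typing import List, Optional, Tuple

INF = float("inf")


class MinCostFlow:
    """Successive shortest paths; Bellman-Ford copes with negative residual costs."""

    def __init__(self, n: int) -> None:
        self.n = n
        # Each edge is [to, remaining_capacity, cost, index_of_reverse_edge].
        self.graph: List[List[list]] = [[] for _ in range(n)]

    def add_edge(self, u: int, v: int, cap: int, cost: int) -> list:
        forward = [v, cap, cost, len(self.graph[v])]
        self.graph[u].append(forward)
        self.graph[v].append([u, 0, -cost, len(self.graph[u]) - 1])
        return forward

    def solve(self, source: int, sink: int) -> Tuple[int, int]:
        total_flow = total_cost = 0
        while True:
            dist = [INF] * self.n
            dist[source] = 0
            prev: List[Optional[Tuple[int, int]]] = [None] * self.n
            for _ in range(self.n - 1):
                changed = False
                for u in range(self.n):
                    if dist[u] == INF:
                        continue
                    for i, (v, cap, cost, _rev) in enumerate(self.graph[u]):
                        if cap > 0 and dist[u] + cost < dist[v]:
                            dist[v] = dist[u] + cost
                            prev[v] = (u, i)
                            changed = True
                if not changed:
                    break
            if dist[sink] == INF:
                return total_flow, total_cost  # no augmenting path remains
            # Find the bottleneck capacity along the cheapest path.
            push, v = INF, sink
            while v != source:
                u, i = prev[v]
                push = min(push, self.graph[u][i][1])
                v = u
            # Apply the flow and update the residual (reverse) edges.
            v = sink
            while v != source:
                u, i = prev[v]
                edge = self.graph[u][i]
                edge[1] -= push
                self.graph[v][edge[3]][1] += push
                v = u
            total_flow += push
            total_cost += push * dist[sink]


# Hypothetical network: source -> warehouses -> regions -> sink.
SOURCE, B, C, NORTH, SOUTH, SINK = range(6)
LANE_CAP = 100
net = MinCostFlow(6)
net.add_edge(SOURCE, B, 60, 0)   # warehouse B can ship 60 orders
net.add_edge(SOURCE, C, 50, 0)   # warehouse C can ship 50 orders
lanes = {
    ("B", "North"): net.add_edge(B, NORTH, LANE_CAP, 2),
    ("B", "South"): net.add_edge(B, SOUTH, LANE_CAP, 5),
    ("C", "North"): net.add_edge(C, NORTH, LANE_CAP, 4),
    ("C", "South"): net.add_edge(C, SOUTH, LANE_CAP, 1),
}
net.add_edge(NORTH, SINK, 40, 0)  # 40 orders to reroute in the North region
net.add_edge(SOUTH, SINK, 50, 0)  # 50 orders to reroute in the South region

total_flow, total_cost = net.solve(SOURCE, SINK)
plan = {lane: LANE_CAP - edge[1] for lane, edge in lanes.items()}
```

Here `plan` maps each warehouse-to-region lane to the number of orders assigned to it, which is the kind of payload a reroute_complete event might carry.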

Memory Models and Learning Surfaces

Effective autonomy depends on memory structures extending beyond a single transaction's scope. Working memory captures the active conversational turn or task parameters currently under consideration. Episodic memory retains a timestamped log of past interactions, allowing agents to run causal queries over their behaviour. Semantic memory maps domain artefacts—policies, catalogue data, pricing rules—into dense vector spaces that support similarity search. Procedural memory codifies successful action sequences as parameterised templates that can be replayed when the context exhibits a high cosine similarity to a previous episode. Together, these stores allow an agent to determine whether a perceived anomaly is novel or an instance of a previously solved incident, leading to faster convergence and reduced exploratory cost.
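The procedural-memory lookup described above can be sketched as a similarity-gated recall. This is a minimal illustration under stated assumptions: the three-dimensional embeddings, the 0.9 threshold, the procedure names, and the step templates are all hypothetical, and a real system would obtain embeddings from a model and store them in a vector database.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class Procedure:
    name: str
    context: Sequence[float]  # embedding of the episode in which the procedure worked
    steps: List[str]          # parameterised templates with {placeholders}

    def render(self, params: Dict[str, str]) -> List[str]:
        return [step.format(**params) for step in self.steps]


class ProceduralMemory:
    def __init__(self, threshold: float = 0.9) -> None:
        self.threshold = threshold
        self.procedures: List[Procedure] = []

    def store(self, procedure: Procedure) -> None:
        self.procedures.append(procedure)

    def recall(self, context: Sequence[float]) -> Optional[Procedure]:
        """Return the closest stored procedure, or None if the situation looks novel."""
        best, best_score = None, self.threshold
        for procedure in self.procedures:
            score = cosine_similarity(context, procedure.context)
            if score >= best_score:
                best, best_score = procedure, score
        return best


memory = ProceduralMemory(threshold=0.9)
memory.store(Procedure(
    name="warehouse_outage_reroute",
    context=[0.9, 0.1, 0.0],
    steps=["pause_dispatch {warehouse}", "reroute_orders from {warehouse}"],
))
memory.store(Procedure(
    name="payment_gateway_failover",
    context=[0.0, 0.2, 0.95],
    steps=["switch_gateway {gateway}"],
))

match = memory.recall([0.85, 0.2, 0.05])   # resembles the earlier warehouse outage
novel = memory.recall([0.5, 0.5, 0.5])     # below the threshold for every episode
rendered = match.render({"warehouse": "A"}) if match else []
```

A `None` result is the signal that the anomaly is novel and should go through full planning rather than template replay.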

Planning and Execution Contracts

Autonomy collapses without a strict contract between the planner and the executor. The planner decomposes an ambiguous goal, such as "reduce churn," into discrete tasks tagged with cost, risk, and SLA constraints. The executor, in turn, guarantees that each task takes effect exactly once. Idempotent commands are fundamental: if a consumer replays an event after a network partition, the replay must produce no additional side effects. Long‑running transactions rely on the saga pattern with compensating steps to recover from partial failure. Timeouts must be explicit, and escalation paths to human operators should be defined in declarative policy so that operational control planes can adjust thresholds at runtime without code redeployments.
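Two of the executor guarantees above, idempotent handling and saga compensation, can be illustrated together. The sketch assumes every event carries a unique identifier and keeps the deduplication set in memory; `IdempotentExecutor`, `run_saga`, and the step names are hypothetical, and a production executor would persist the seen-set durably alongside the side effect.

```python
from typing import Callable, List, Set, Tuple

Step = Tuple[str, Callable[[], None], Callable[[], None]]  # (name, action, compensation)


class IdempotentExecutor:
    """Skips any event id it has already applied, so replays change nothing."""

    def __init__(self) -> None:
        self._seen: Set[str] = set()

    def handle(self, event_id: str, action: Callable[[], None]) -> bool:
        if event_id in self._seen:
            return False  # duplicate delivery: no side effect
        action()
        self._seen.add(event_id)  # recorded only after the action succeeded
        return True


def run_saga(steps: List[Step]) -> bool:
    """Run steps in order; on failure, undo completed steps in reverse order."""
    completed: List[Step] = []
    for step in steps:
        _name, action, _compensate = step
        try:
            action()
        except Exception:
            for _done_name, _done_action, undo in reversed(completed):
                undo()
            return False
        completed.append(step)
    return True


# Idempotency: the same event delivered twice is applied once.
applied: List[str] = []
executor = IdempotentExecutor()
first = executor.handle("evt-1", lambda: applied.append("reroute_orders"))
replay = executor.handle("evt-1", lambda: applied.append("reroute_orders"))

# Saga: the second step fails, so the first step is compensated and the third never runs.
ledger: List[str] = []


def fail_charge() -> None:
    raise RuntimeError("payment gateway unavailable")


saga_ok = run_saga([
    ("reserve_stock", lambda: ledger.append("reserve_stock"), lambda: ledger.append("release_stock")),
    ("charge_card", fail_charge, lambda: ledger.append("refund_card")),
    ("ship", lambda: ledger.append("ship"), lambda: ledger.append("cancel_shipment")),
])
```

Compensations run only for steps that completed, which is why no refund_card entry appears in `ledger` after the failed charge.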

Governance and Observability

Observability evolves from a nicety into a safety requirement in an architecture where code makes decisions without human intervention. Every decision path—including LLM prompt, generation token stream, and deterministic reducer output—should be captured in a trace that can be re‑hydrated for incident analysis. Agents authenticate themselves with short‑lived, auditable credentials issued by SPIFFE/SPIRE and are subject to the same attribute‑based access control that governs human identities. A real‑time inference firewall redacts sensitive entities, enforces cost ceilings, and blocks policy‑violating actions before they leave the enclave. These measures turn compliance discussions from speculative assurances into verifiable facts.
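The inference firewall mentioned above combines three checks: entity redaction, a cost ceiling, and an action deny-list. The sketch below is an assumption-heavy illustration. The regular expressions catch only simple email and card-number shapes, the dollar figures and action names are invented, and `InferenceFirewall` is a hypothetical class rather than any product's API.

```python
import re
from dataclasses import dataclass
from typing import Set

# Deliberately simple patterns; real redaction needs a dedicated entity detector.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def redact(text: str) -> str:
    return CARD.sub("[CARD]", EMAIL.sub("[EMAIL]", text))


@dataclass
class Verdict:
    allowed: bool
    text: str
    reason: str = ""


class InferenceFirewall:
    def __init__(self, cost_ceiling_usd: float, blocked_actions: Set[str]) -> None:
        self.cost_ceiling_usd = cost_ceiling_usd
        self.blocked_actions = blocked_actions
        self.spent_usd = 0.0

    def check(self, text: str, action: str, estimated_cost_usd: float) -> Verdict:
        if action in self.blocked_actions:
            return Verdict(False, "", "action blocked by policy")
        if self.spent_usd + estimated_cost_usd > self.cost_ceiling_usd:
            return Verdict(False, "", "cost ceiling exceeded")
        self.spent_usd += estimated_cost_usd
        return Verdict(True, redact(text))  # only redacted text leaves the enclave


firewall = InferenceFirewall(cost_ceiling_usd=1.00, blocked_actions={"delete_customer_records"})

v_ok = firewall.check(
    "Refund jane.doe@example.com on card 4111 1111 1111 1111",
    action="issue_refund",
    estimated_cost_usd=0.60,
)
v_blocked = firewall.check("purge account", action="delete_customer_records", estimated_cost_usd=0.01)
v_budget = firewall.check("second refund", action="issue_refund", estimated_cost_usd=0.60)
```

Each `Verdict` carries a reason string, so a denied action can be written to the same trace that records the prompt and the model output.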

Platform Options and Trade‑Offs

Commercial suites such as Salesforce Einstein, Microsoft Copilot Studio, and ServiceNow Assist integrate connectors, role‑based access control, and regulator‑aligned logging at the expense of black‑box behaviour and limited extension points. Foundation‑model APIs from OpenAI, Anthropic, and Google Gemini expose lower‑level primitives that allow fine‑grained prompt engineering and streaming yet shift the burden of scaling and robust gating onto the adopter. Open‑source frameworks—LangChain, CrewAI, and similar—favour modular experimentation and community velocity but require meticulous hardening around sandboxing, dependency pinning, and side‑effect isolation before they can survive a compliance audit. In heavily regulated verticals, domain‑specific vendors hard‑code lineage capture and pre‑validated workflow templates, accelerating initial deployment while potentially locking teams into a narrower capability surface. Most enterprises blend these approaches: they prototype with open frameworks, operationalise critical paths on a managed platform, and reserve bespoke micro‑agents for workloads that differentiate their business logic.

Comparative Lens: Architectures and Methods for Agentic Development

The landscape of agent construction has matured into three recognisable archetypes, each anchored in different assumptions about initiative, state management, and integration surfaces. The first—and still most common—pattern is the reactive pipeline built around deterministic micro‑services. Here, an LLM, if present, sits at the edge of a request/response chain, enriching queries or post‑processing results but never initiating control flow. Such systems are straightforward to reason about and easy to secure because every invocation is traceable to an authenticated caller. Their weakness is evident under volatility: they cannot observe ambient signals and therefore cannot pre‑empt degradation or opportunity.

A second family relies on tool‑orchestrated multi‑agent collaboration. Frameworks such as AutoGen and LangGraph, often combined with the ReAct prompting pattern, wire multiple specialist workers together through a planning loop. One agent decomposes a goal, others invoke external tools, and a central policy loop decides whether to continue iterating or stop. These systems gain breadth, since each agent can be swapped or upgraded independently, and exploit chain‑of‑thought prompting for transparent reasoning. Yet they remain fundamentally pull‑based: execution is sparked by an explicit goal object placed on a queue. Latency also grows with every round‑trip through the coordinator, making them ill‑suited for millisecond‑scale control paths.

The emerging third approach is data‑driven proactivity, as formalised in Lu et al.'s Proactive Agent work presented at ICLR 2025. Instead of waiting for user intent, the agent is fine‑tuned on real interaction traces and rewarded for predicting the next helpful action. The authors introduce ProactiveBench, a 6k‑event corpus synthesised from keyboard, mouse, clipboard, and browser telemetry, and demonstrate that a modest 7B‑parameter model tuned on this data achieves a 66% F1 score in anticipating user tasks, surpassing larger frozen baselines. The training loop includes a reward model that captures human acceptance criteria, reducing false‑positive interventions without suppressing initiative. This style maps naturally onto an event bus in production: every UI click, API exception, or IoT sensor update becomes both a training signal and a runtime trigger.

From an architectural perspective, the three models can be compared across several axes. Initiative ranges from none (reactive) through partial (tool‑orchestrated) to autonomous (proactive). State coupling evolves from strictly request‑scoped to shared vector memory to continuously learned policies requiring episodic and semantic storage. Latency budgets widen from synchronous RPC latencies to minutes‑long deliberation loops; proactive agents re‑tighten the bounds by attaching directly to the event stream. Governance posture also diverges: reactive systems reuse conventional RBAC; multi‑agent stacks demand fine‑grained tool permissions; proactive runtimes require policy engines that can revoke autonomy at runtime based on risk scores.

The strategic takeaway is that these approaches are not mutually exclusive. High‑throughput, safety‑critical paths—payment settlement, medical device control—still benefit from reactive determinism. Tool‑orchestrated agents excel where the goal is explicit but multi‑step reasoning is costly for humans, such as data‑migration playbooks or compliance report generation. Proactive agents shine in latent‑signal domains: anomaly remediation, sales‑ops nudging, or IT service management, where the cost of missing a window outweighs the risk of an occasional false positive. Mature enterprise stacks will therefore field all three, chaining them through the same event backbone while enforcing a unified audit trail.

Operational Readiness

Before releasing an autonomous workflow into production, engineering teams must verify three dimensions of readiness. Alignment tests validate that the agent's goal hierarchy maps to measurable KPIs. Observability tests inject failure modes—slow consumer, poison‑pill event, LLM rate‑limit breach—and confirm that metrics, traces, and synthetic checks surface the degradation within the agreed response window. Change‑management tests examine whether upstream teams and on‑call engineers trust the automation enough to leave it untouched outside break‑glass scenarios; if operators routinely override agent decisions, the system remains effectively manual.
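One of the failure modes listed above, the poison-pill event, lends itself to a small injection test. The sketch assumes JSON-encoded events with an `id` field, a fixed retry budget, and an in-memory dead-letter list; `Consumer` and the metric names are hypothetical. The check is that the bad event is quarantined, a metric records it, and later events still flow.

```python
import json
from collections import Counter
from typing import List


class Consumer:
    """Retries a failing event a bounded number of times, then dead-letters it."""

    def __init__(self, max_attempts: int = 3) -> None:
        self.max_attempts = max_attempts
        self.metrics: Counter = Counter()
        self.processed: List[int] = []
        self.dead_letters: List[str] = []

    def _process(self, raw: str) -> None:
        event = json.loads(raw)              # malformed JSON raises JSONDecodeError
        self.processed.append(event["id"])   # a missing id raises KeyError

    def consume(self, raw: str) -> None:
        for _attempt in range(self.max_attempts):
            try:
                self._process(raw)
                self.metrics["processed"] += 1
                return
            except (json.JSONDecodeError, KeyError):
                self.metrics["failed_attempts"] += 1
        # Retry budget exhausted: quarantine the event instead of blocking the stream.
        self.dead_letters.append(raw)
        self.metrics["dead_lettered"] += 1


# Inject one poison pill between two healthy events.
stream = ['{"id": 1}', '{not json', '{"id": 2}']
consumer = Consumer(max_attempts=3)
for raw_event in stream:
    consumer.consume(raw_event)
```

In a real readiness test the assertions would run against the platform's metrics and tracing backends rather than an in-process counter.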

Interoperability and Policy Futures

As organisations deploy heterogeneous agent fleets, standardisation is emerging around inter‑agent messaging (for example, the Open Agent Protocol), shared ontologies, and secure credential delegation. Policy engines will increasingly shift from static allow‑lists to context‑aware risk scores, gating autonomy levels dynamically based on situational factors such as transaction value, user segment, or regulatory jurisdiction. Continuous assurance tooling—akin to chaos engineering for policy—will inject simulated threats into the runtime to validate that guardrails remain effective as the environment evolves.
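The shift from static allow-lists to context-aware risk scores can be pictured as a function from situational factors to an autonomy level. The weights, thresholds, segment names, and the `STRICT_JURISDICTIONS` set below are all assumptions chosen for illustration; a real policy engine would load them from declarative policy rather than hard-code them.

```python
from dataclasses import dataclass
from enum import IntEnum


class Autonomy(IntEnum):
    SUGGEST_ONLY = 0        # agent proposes, a human acts
    ACT_WITH_APPROVAL = 1   # agent acts after explicit sign-off
    FULLY_AUTONOMOUS = 2    # agent acts and reports afterwards


@dataclass(frozen=True)
class Context:
    transaction_value: float
    user_segment: str
    jurisdiction: str


# Hypothetical policy inputs.
STRICT_JURISDICTIONS = {"EU"}
VALUE_CAP = 10_000.0


def risk_score(ctx: Context) -> float:
    """Combine situational factors into a score between 0.0 and 1.0."""
    score = 0.5 * min(ctx.transaction_value / VALUE_CAP, 1.0)
    if ctx.user_segment == "enterprise":
        score += 0.2
    if ctx.jurisdiction in STRICT_JURISDICTIONS:
        score += 0.3
    return score


def autonomy_for(ctx: Context) -> Autonomy:
    """Gate the autonomy level on the risk score; higher risk means less autonomy."""
    score = risk_score(ctx)
    if score < 0.3:
        return Autonomy.FULLY_AUTONOMOUS
    if score < 0.6:
        return Autonomy.ACT_WITH_APPROVAL
    return Autonomy.SUGGEST_ONLY


low = autonomy_for(Context(500.0, "consumer", "US"))
medium = autonomy_for(Context(6_000.0, "enterprise", "US"))
high = autonomy_for(Context(20_000.0, "enterprise", "EU"))
```

Because the level is recomputed per decision, changing a threshold or adding a jurisdiction alters agent behaviour at runtime without redeploying the agent itself.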

Conclusion

Event‑driven architecture provides the temporal resolution, fault isolation, and decoupling required for genuine machine autonomy, while large language models supply the reasoning substrate. When these components are wired together with robust orchestration, memory, and governance layers, enterprises unlock systems that convert raw signals into decisive action at the speed of data propagation. For engineering teams willing to embed these patterns deeply into their architecture, the reward is a platform that identifies problems and executes mitigations before human operators know something changed. In this context, autonomy is not a marketing tagline—it is a measurable reduction in mean time to resolution and an equally measurable increase in engineering leverage.
